In a recent article in the Archives of Neurology, a team of British and Belgian neuroscientists describes a clinically unconscious accident victim who can, on command, imagine herself playing tennis and walking around her house. Arguing that her functional brain imaging (fMRI) scans are indistinguishable from those of healthy volunteers performing the same mental tasks, the researchers claim that the young woman's fMRI "confirmed beyond any doubt that she was consciously aware of herself and her surroundings, and was willfully following instructions given to her, despite her diagnosis of a vegetative state."

Their extraordinary conclusions are beyond provocative; they raise profound questions about the very notion of consciousness. What's more, they could throw thousands of families and doctors into utter turmoil. As with the Terri Schiavo controversy, patient advocacy groups, self-serving lawyers and politicians with personal agendas could seize on the study's stamp of certainty and treat it as settled fact.

Yet the study's conclusions are not beyond doubt. There is ample reason to question whether this young woman is conscious and capable of choice.

Let's briefly look at the study. In mid-2005, a 23-year-old woman sustained massive head injuries in an auto accident. Following multiple brain surgeries and five months of rehabilitation attempts, she remained unresponsive. According to her treating physicians, she could open her eyes but could not respond to any commands; she could not voluntarily look in the direction of a voice; there was no evidence of orientation or emotional response. They determined that she was in a persistent vegetative state: a neurological category for patients who emerge from coma and appear to be awake but show no signs of awareness of self or environment.

Before the recent advances in functional brain imaging, most neurologists, relying on bedside observation and brain-wave studies, would have agreed that the woman, though "awake," was extremely unlikely to have a significant private mental life, whether in terms of personal awareness or willful mental activity. (This failure to distinguish between awake and aware was a major feature of the Schiavo affair.) But new tools bring new opportunities; her doctors wondered whether fMRI could deepen our understanding of the clinically unresponsive brain. What if it could demonstrate residual consciousness and self-awareness, perhaps even the ability to respond to commands?

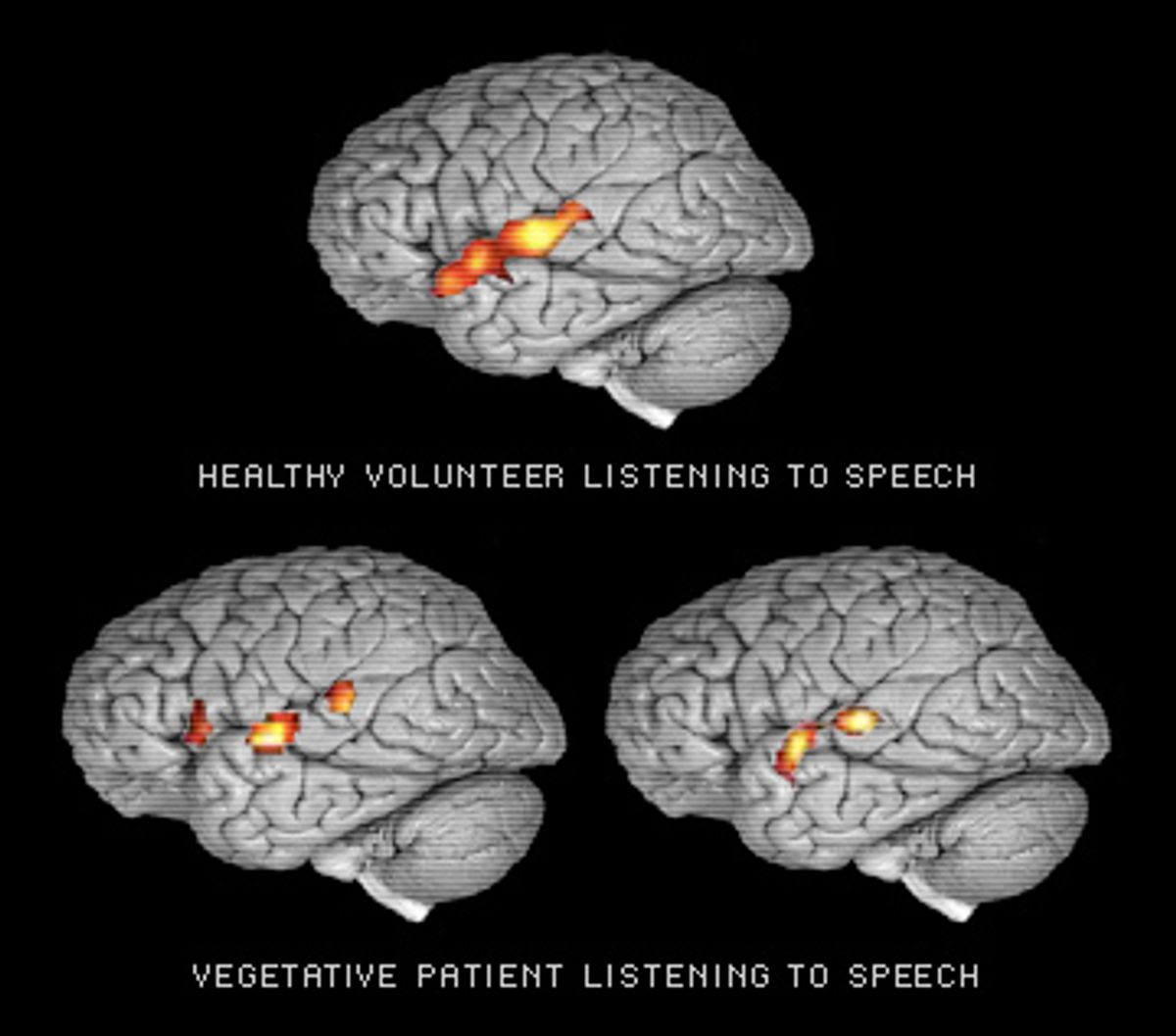

Their proposed study was quite simple. While she lay inside an fMRI scanner, the researchers asked the unresponsive woman to perform three mental tasks: relax, imagine playing tennis, and imagine walking through the various rooms of her home. The tasks were chosen because they activate different areas of the brain. Imagining playing tennis would light up the supplementary motor area, a region involved in imagining as well as performing coordinated movements. In contrast, imagining moving from room to room in a house would activate regions, such as the posterior parietal lobe, that contribute to imagined or real spatial navigation.

At first glance, the results are startling. The patient was able to activate the same general brain regions as conscious volunteers serving as controls in the test; according to the authors, the images were statistically indistinguishable.

But are the authors' conclusions justified? Should we accept that the patient really was consciously aware and able "to understand instructions, carry out different mental tasks, and exhibit willed voluntary behavior in the absence of any overt action"?

Traditionally, consciousness has been considered a subjective and private experience. Unless we subscribe to Las Vegas versions of telepathy, we are dependent upon what a person's mind tells us about what that mind is doing. We ask someone what they are thinking or how they are feeling; if they don't answer, we are left guessing at the meaning of sighs, grunts and bits of body language and gesture.

Are we now to believe that an fMRI can tell us the level and nature of a patient's consciousness even when the patient can't respond? Putting aside for a moment the very considerable questions of fMRI methodology and interpretation, are we ready to accept technology as the final word in assessing mental states?

This is not simply an academic question applicable to a single patient. Tens of thousands of patients in a persistent vegetative state linger in long-term care facilities. Others remain under the radar, cared for at home by their families. The estimated cost of their medical treatment is between $1 billion and $7 billion a year. Once larger numbers of patients are evaluated via fMRI, it is quite likely that we will find others with similar degrees of activation on a variety of mental tasks. Family members will be asked to understand, interpret and act on the scan reports. I cannot imagine a worse medical nightmare than being told that a clinically unconscious spouse or child has been shown on fMRI to have an active imagination and substantial self-awareness, especially when the findings don't alter the grim prognosis or justify greater rehabilitative efforts. Before putting a family through such agonizing dilemmas, we neurologists should be reasonably certain that what the fMRI shows does correspond to actual mental states in the seemingly unconscious.

But how could we make such a determination? How can you objectively confirm what is, at bottom, a subjective experience?

Stymied, I decided to take a step back and review what the fMRI has shown in other states of diminished consciousness. But to understand what we see on such scans, we need to first look at how the brain processes information. For a quick example, consider how a visual image is formed and projected into consciousness.

Your retina detects a yellow-and-black fluttering. The information is sent to the primary visual cortex. Specialized collections of neurons (modules) process different aspects of vision, such as vertical or horizontal motion, lines and edges, and color. The output of these modules then flows into higher-order networks, first within the visual association areas and then into more widely distributed circuitry for non-visual elements, such as memories of a similar pattern hovering over a mountain lake, a trip to a natural science museum with your grandfather, the cover of a book on chaos theory, or a scary scene from "The Silence of the Lambs." Finally, through mechanisms both utterly mysterious and widely distributed throughout the brain, this unconsciously constructed perception is delivered into awareness; you suddenly "see" a monarch butterfly floating in front of you.

If we were to watch this process on fMRI, we would see activation of the primary visual cortex, the secondary visual association areas, and more widely distributed non-visual areas that contribute to your understanding of and feelings toward a butterfly. But we would not see the image's transition from unconscious to conscious perception. Consciousness isn't generated by a specific brain area that can be directly visualized. The fMRI can show us the precursors of a perception, but it cannot tell us whether the person is actually aware of that perception.

To put this into everyday terms, imagine your morning commute. You settle into your car, turn on the news and are immediately immersed in the latest presidential scandal. Seemingly seconds later, you find yourself at work, with no specific awareness of having made the 10-mile drive. Nevertheless, if you had been wearing a portable fMRI helmet, your scan would have shown various areas of your brain lighting up as it successively processed a wide variety of inputs that you may or may not remember having heard or seen. You may think of making turns or applying the brakes as intentional actions, but in fact your unconscious mind was making these seemingly deliberate choices while the conscious "you" was on autopilot.

When judging a patient's degree of personal awareness and ability to make willful decisions, it's important to distinguish between choices that can only be made consciously (such as consistently choosing to blink once for yes or twice for no) and choices that might be more reflexive and occur outside of consciousness. In short, we need to know if a choice is truly deliberate or merely the unconscious activation of neural circuitry.

So is it possible that this young woman's fMRI scans do not reflect conscious intention and choice? Maybe her injury destroyed her ability to make unconscious thoughts conscious but left her auditory pathways and subconscious processing mechanisms sufficiently intact to "hear" and respond to the researchers' instructions.

Although it's too early to draw definitive conclusions, several recent fMRI studies point in this direction. In the March 2007 Journal of Neurology, Chinese researchers reported on the ability of unconscious patients to recognize their own names. Of seven patients in a persistent vegetative state who heard a familiar voice saying their name (the same technique used when asking the unconscious young woman to relax), three showed activation of the primary auditory cortex, and two also activated higher-order association areas in the temporal lobe. So, are we to conclude that such patients are secretly conscious, or are they merely registering recognition of their own names unconsciously? And how would we know?

Imagine being at a cocktail party. You are intently talking to one person; all other conversations are mercifully tuned out. Suddenly you are aware that someone across the room has mentioned your name. Neural circuitry previously trained to recognize your name did what it was trained to do; the conscious "you" neither willed this activation nor asked to be notified when your name came up. Seen in this light, the Chinese researchers elegantly demonstrate how extensive brain areas specialized for language processing can be activated in unconscious patients. The study does not tell us whether these patients were actually aware of hearing their names, nor does it tell us that this processing was consciously willed.

These studies may be the earliest fMRI demonstrations of how much cognition actually occurs at a purely unconscious level. Indeed, Nicholas Schiff of Cornell Medical School, who specializes in disorders of impaired consciousness, has repeatedly cautioned that preservation of isolated neural networks (like name recognition) shouldn't be construed as representing conscious awareness; rather, such activity might be seen as evidence for parts of the brain operating outside of awareness.

Back to the young woman imagining playing tennis. Since the appearance of the preliminary version of the study in Science in 2006, there has been a flurry of criticisms, ranging from how to construct a study that can separate out conscious choice from more automatic unconscious processing, to the larger question of whether the fMRI is capable of confirming the presence of consciousness. The authors haven't budged.

On his Web site, the lead author, Cambridge neuroscientist Adrian Owen, has expanded his conclusion by suggesting that the performance of these mental tasks might be a way that non-communicative patients "may be able to use their residual cognitive capabilities to communicate their thoughts to those around them by modulating their own neural activity." Imagine being the young woman's parent and reading that just maybe your unconscious daughter could communicate with you, if only science could perfect the proper techniques.

The dream of technology clarifying personal experience carries a huge moral burden. I've lived through the era when the electroencephalogram (EEG) was correlated with everything from borderline personality to impulsive, even homicidal behavior. In criminal trials, EEGs were commonly offered as evidence of diminished capacity. Patients without clinical seizures were treated with major league anticonvulsants simply because they had minor brain-wave irregularities. With time and experience, enthusiasm for the specificity of EEGs subsided; the neurological community learned that a wide variety of "irregular" findings might be nothing more than the far ends of the distribution of normal findings.

Now the new fair-haired kid on the neurological block is the fMRI. Its underlying principle is fairly straightforward. Active areas of the brain need more oxygen; the resulting local increase in oxygenated blood flow is detected by the scanner and displayed as hot spots. But fMRI accuracy, reliability and interpretation remain debatable, especially when it comes to studying complex mental activities with relatively primitive and indirect measurements of blood flow. At best, the fMRI is the physiological equivalent of an aerial photograph of a house. It can tell you which rooms are lighted, even the amount of electricity being used in any room, but it cannot tell you what is going on in a lighted room, or even whether anyone is home and aware of the light.

Despite numerous discussions of its technical limitations (the April 2005 Scientific American article "Fact or Phrenology" is an excellent overview), the fMRI has captured the popular imagination big time. In 2006, there were 1,500 fMRI articles in professional journals. Some studies have been meticulous and provide superb insights into brain function. Others have been trivial, if not downright silly, such as studies of why consumers might prefer Coke to Pepsi, or claims that fMRI can conclusively determine whether you are lying. Some border on science fiction, such as German neuroscientist John-Dylan Haynes' suggestion that computers may one day be able to read minds: "Every thought is associated with a characteristic pattern of activation in the brain. By training a computer to recognize these patterns, it becomes possible to read a person's thoughts from patterns of their cerebral activity."

My primary concern isn't with the variable quality of fMRI studies; we are all aware that science proceeds in fits and starts, the good and the bad sorted out over time. For me, the problem is one of ethical responsibility to patients and their families. And there is the issue of social responsibility. Science doesn't exist in a vacuum; I would hate to see such preliminary and controversial studies cited as evidence for otherwise untenable or extreme social policies.

Perhaps Owen and his colleagues are correct and this unfortunate woman has fleeting moments of consciousness and is trying to communicate her thoughts. But perhaps the study shows nothing more than preserved bits of neural machinery capable of making unconscious choices. There cannot be a definitive conclusion until we understand every aspect of brain function and have demonstrated precisely what neural states correspond to specific conscious experiences. Until this mythical time arrives, pronouncements of fMRI confirmation of consciousness aren't science; they are bald speculations and should be so labeled.

Scientists dealing with human lives are obliged to heed the maxim long associated with the Hippocratic Oath: primum non nocere. Above all, do no harm. The study by Owen and colleagues provides provocative insights into the degree of residual cognitive processing that can be preserved in a clinically unconscious patient. That is enough. Whether this unfortunate young woman has elements of consciousness lurking beneath her unresponsive behavior is not a question that can be answered through technology.
