“GMA,” said the woman answering the phone on the opposite coast. The acronym was unfamiliar, so it took me a moment to realize that I had the right number for "Good Morning America" and was calling the correct person back from the popular ABC television show.

Months before, I had written an item on my blog about the existence of online cheating videos, created by high school and college students, that demonstrate elaborate techniques designed to boost examination scores.

The videos show, step by step, how to create fake drink bottle labels in Photoshop to hide formulae, how to stuff pen shafts with answer scrolls, and how to write detailed foreign-language conjugations on stretched-out rubber bands. Most of the videos had low production values and were shot in informal domestic settings, but at least one Japanese video borrowed the actual form of commercial distance learning, with distinct chapters on the subject of cheating; the lessons were elaborated with slick information graphics and computer-generated animation to enhance the instructional content.

My story about these academic dishonesty videos on YouTube had been picked up by a blog for the Chronicle of Higher Education. Then a reporter from the Shreveport Times interviewed me and ran an article about the subject, which later appeared in the large-circulation paper the Chicago Sun-Times. From there, the story reached researchers at "Good Morning America" who thought it had the right kind of national, light-news appeal for early morning mainstream audiences. Although I am an academic, "Good Morning America" apparently hoped that I might be an adequately colorful commentator for the show and asked me to speak to the phenomenon of this new kind of online dishonesty.

I thought the cheating videos were interesting because they demonstrated an argument that I had been developing over the course of the past decade. First, with regard to everyday practices and long-term goals, formal institutions of codified pedagogy, represented by universities, were increasingly in conflict with individuals who were informally self-taught. Second, access to and use of computational media frequently seemed to exacerbate this tension. I thought this situation was particularly unfortunate in the era of socially networked computing, when one would hope that academic and popular forms of instruction would be converging to work in concert, thereby supporting a life-long culture of inquiry, collective intelligence, and distributed research practices. Certainly, the students in the videos were sharing tips and performing online knowledge-networking activities that constituted a form of real learning, even if such learning would be considered objectionable by their professors, who would, understandably, regard the content of the videos as being fundamentally in violation of the scholarly social contract.

In these cheating videos, the students demonstrated that they understood the procedures of their own educational institutions and had learned a different approach to the sequences of operations involved in the minutiae of test-taking—an approach that might guarantee higher scoring success. They had achieved mastery of something that seemed relevant to success in the university, but it wasn’t what their professors wanted them to have learned.

In addressing the hundreds of thousands who watch such videos, students aren’t the only ones in the implied audience. These videos appeal to many nonacademic viewers who enjoy watching, from a remove, the hacking of obstreperous or powerful systems as demonstrated in videos about, for instance, fooling electronic voting booths, hacking vending machines, opening locked cars with tennis balls, or smuggling contraband goods through airport x-ray devices. These cheating videos also belong to a broader category of YouTube videos for do-it-yourself (DIY) enthusiasts, those who like to see step-by-step execution of a project from start to finish. YouTube videos about crafts, cooking, carpentry, decorating, computer programming, and installing consumer technologies all follow this same basic format, and popular magazines like Make have capitalized on this sub-culture of avid project-based participants. Although these cultural practices may seem like a relatively new trend, one could look at DIY culture as part of a longer tradition of exercises devoted to imitatio, or the art of copying master works, which have been central to instruction for centuries.

Unfortunately, by the time the production offices for "Good Morning America" called my university office months after my initial blog posting, the story of these students’ acts of ingenuity had become transformed by the producers into lurid evidence for yet another Internet moral panic.

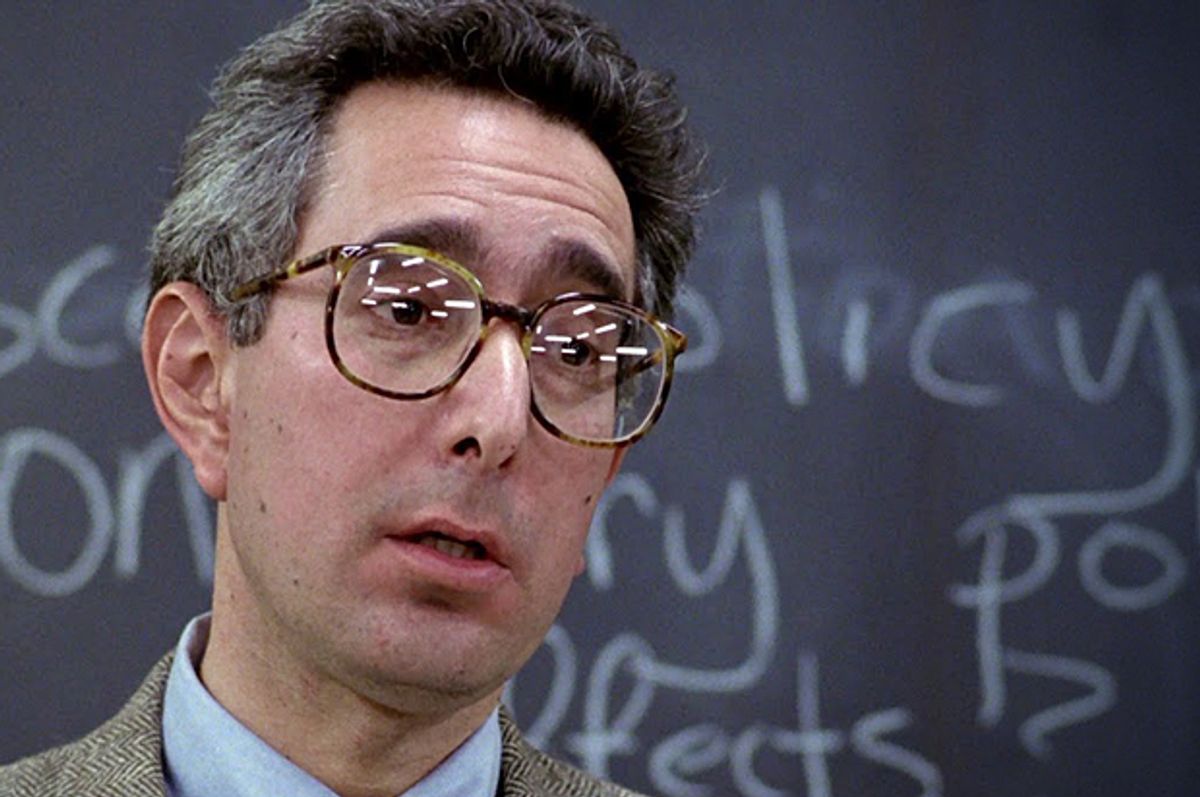

Somewhere between the predator panic—when the news seemed to be all about online sexual deviants preying on innocent young people—and the bullying panic over the cruelty of the young themselves toward their peers, there were a variety of miscellaneous panics being aired on national television with horrified glee. My conversation with the GMA staffer quickly became strained. The show obviously wanted a voice of moral outrage to defend tradition and condemn the lax values of the young from the elevated position of a stereotypically highbrow college professor. Several times I was prompted to say how reprehensible I must think the behavior of the video makers was. At the same time, the woman from ABC was unable to suppress her voyeuristic pleasure in having lined up two of the actual video-producing student perpetrators so they could be paraded before the public in their unrepentant condition.

Ultimately, the episode of "Good Morning America" aired on December 10, 2008, without me. The show included a segment with the title “High-Tech Cheating” that featured New York Times columnist Randy Cohen of “The Ethicist.” Cohen was interested in the ironic mixture of exhibitionism and anonymity that these YouTube content creators valued, and—like me—he recognized the how-to genre being imitated by these aspiring YouTube stars.

I was astonished at how skillfully they mimicked the form of the how-to video. They all had scores; they had soundtracks; they have opening credits; they have closing credits. … What was more striking about this than it being a record of their cheating was it being their desire I think to be television producers or television stars.

For Cohen, the students were primarily actors rather than educators; their frame of reference wasn’t subverting educational institutions, it was emulating media organizations. Indeed, since many of the videos began with a disclaimer that cheating tactics were being shown “for entertainment purposes only,” Cohen had good reasons to view the videos from this perspective.

Yet a number of university researchers have argued that cheating and learning are intimately connected in the digital era and that subverting a complex system is often the best way to understand its rules. Mizuko Ito and her team of MacArthur Foundation–funded researchers have studied a continuum of teen behaviors around knowledge transfer—from trading gossip to “geeking out” over shared enthusiasms for highly specialized topics. Ito’s group has noted that these kinds of informal educational practices are closely related to the widespread adoption of social networks and video games. As Ito and her collaborators explain, “access to cheats and other secondary gaming texts was common among kids.”

Prior to the release of this report, Mia Consalvo had argued that cheating in video games is expected behavior among players and that cheaters perform important epistemological work by sharing information about easy solutions on message boards, forums, and other venues for collaborations.

Consalvo also builds on the work of literacy theorist James Paul Gee, who asserts that video game narratives often require transgression to gain knowledge and that, just as passive obedience rarely produces insight in real classrooms, testing boundaries by disobeying the instructions of authority figures can be the best way to learn. Because procedural culture is ubiquitous, however, Ian Bogost has insisted that defying rules and confronting the persuasive powers of certain architectures of control only brings other kinds of rules into play, since we can never really get outside of ideology and act as truly free agents, even when supposedly gaming the system.

Ironically, more traditional ideas about fair play might block key paths to upward mobility and success in certain high-tech careers. For example, Betsy DiSalvo and Amy Bruckman, who have studied Atlanta-area African-American teens involved in service learning projects with game companies, argue that the conflict between the students’ own beliefs in straightforward behavior and the ideologies of hacker culture makes participation in the informal gateway activities for computer science less likely. Thus, urban youth who believe in tests of physical prowess, basketball-court egalitarianism, and a certain paradigm of conventional black masculinity that is coded as no-nonsense or—as Fox Harrell says—“solid” might be less likely to take part in forms of “geeking out” that involve subverting a given set of rules. Similarly, Tracy Fullerton has argued that teenagers from families unfamiliar with the norms of higher education may also be hobbled by their reluctance to “strategize” more opportunistically about college admissions. Fullerton’s game "Pathfinder" is intended to help such students learn to game the system by literally learning to play a game about how listing the right kinds of high-status courses and extracurricular activities will gain them social capital with colleges.

The kind of learning associated with cheating, modding, and hacking also raises provocative questions about the set expectations of everyday life for those in more privileged groups. For example, my own son, who was nine years old at the time that I began writing this book, was playing a sequel to "The Sims," which is a video game where players build houses, spend money, and do a number of everyday adult activities in the pursuit of wealth and happiness. Some versions of the game also give players god-like powers to design an entire world in which points are accrued based on the sustainable homeostasis of their model of utopia and the interactions between competing interests that the player has constructed. "The Sims" is a computer simulation that is often praised by educators who promote digital media and learning because the game requires a lot of problem solving. Although created by the famed libertarian Will Wright, "The Sims" launched a franchise in which players can participate in the social engineering of everything from the acquisition of consumer goods in an individual household to building large-scale urban infrastructures that include power grids and transportation policies intended to support the health of complex, globalized economies.

My son’s digital media and learning experience with "The Sims" included several hours spent carefully choosing furnishings for the house of his virtual family. He didn’t splurge on the most lavish electronics or opulent furniture for his computer-generated dwelling; his aim was to combine his aesthetic sensibilities about what would constitute good taste in a home while accommodating all the various needs of his simulated family (who were named after the members of his real family and shared many of their characteristics). However, he was soon disappointed to realize that he didn’t have enough money in the world of the simulation to purchase the house that he had worked so hard to prepare for occupancy. Quickly he demanded to be allowed online to see if there were any cheat codes to remedy the situation.

Even from his nine-year-old perspective, cheating in the simulation was a rational response to the constraints arbitrarily introduced into the game to create want and competition. There was no scarcity of material resources dictating the situation he found himself in. Given the nature of the means of production in bits and bytes, a well-appointed virtual house costs no more in statistically significant terms to the company that publishes the game, Electronic Arts, than an impoverished dwelling. And he had already paid for the game with a birthday gift card that represented real currency and had contributed several hours’ worth of his labor in creating his ideal decorative scheme. Besides, he argued that his aims were altruistic in that he had planned to gift me the house to show me all the pleasures that could be had in a "Sims" environment, as a kind of vacation home that could serve as a refuge from the real-life drudgery that he saw was associated with a real-life home and its domestic chores. Although the game emulates some of the unfairness of the real-life market system that excludes many from home ownership by creating barriers, the game’s barriers could be easily overcome, unlike many barriers to social advancement (those of class, education, race, ethnicity, and language) in the real world. As game scholars McKenzie Wark and Jane McGonigal have both observed, games are appealing because the rules seem more fair and more oriented toward rewarding effort and problem-solving than the rules of the much more dysfunctional real world governed by consumer capitalism (Wark’s critique) and largely disconnected from concerns for the personal happiness of individuals (McGonigal’s area of concern).

However, Gee would later argue in "The Anti-Education Era" that gamesmanship that enables universal access and personal privilege may actually be extremely counterproductive. Hacks that “make the game easier or advantage the player” can “undermine the game’s design and even ruin the game by making it too easy.” Furthermore, “perfecting the human urge to optimize” can go too far and lead to fatal consequences on a planet where resources can be exhausted too quickly and weaknesses can be exploited too frequently. Gee also warns that educational systems that focus on individual optimization create cultures of “impoverished humans” in which learners never “confront challenge and frustration,” “acquire new styles of learning,” or “face failure squarely.”

In understanding the GMA segment on “High-Tech Cheating,” it is worth noting that Cohen’s “The Ethicist” column often deals with the ambiguities of everyday situations in which fairness, access to information, reciprocity, and obligation to abide by common social codes come into play, so the justifications that my nine-year-old provided might be familiar to him—even if cheating in a Sims-style game is clearly more of a victimless crime than cheating on an examination. For example, a classic case addressed in Cohen’s column involves whether or not it is ethical to move up to more expensive seats that are unoccupied at a sporting event. In that case, decision-making to maximize resources and individual happiness is at odds with the variable reward structures of ticket pricing. As the one talking head introducing moral ambiguity on the show, Cohen was drawing viewers’ attention to more subtle aspects of the cheating videos than the obvious fact that they condone cheating on examinations. If Cohen were speaking as a rhetorician rather than as a newspaper columnist, he might ask: What perfectly legitimate rhetorics do such cheating videos promulgate, and what conventions about instruction do they promote?

The "Good Morning America" story about the cheating videos was introduced by anchor Chris Cuomo, who explicitly connected the story to the Internet’s generally understood role in online teaching and learning.

All of you know that the Internet has revolutionized the way that our kids learn. Of course you do. But did you know that it has also revolutionized the way that they cheat? Listen to this: 40 percent of American students admit to cheating. And Internet plagiarism, that used to be the big worry, but now there is a bigger concern: online instructional videos, kids literally teaching other kids tricks to help them cheat at school.

After this reminder about the revolutionary character of the Internet, correspondent Juju Chang then picked up the story and compared knowledge acquisition in online communities to an “echo chamber” of received opinion.

And you can chalk this up just when you think you’ve seen it all online. Well, you know, cheating, of course, is nothing new, but what’s alarming is that it is being celebrated in the echo chamber of the Internet. That’s when it’s time for adults to take a second look.

Later, contributor Ann Pleshette Murphy, who was billed as an expert on parenting, complained lamely about how the “YouTube craze” encourages “shameless” behavior such as “boasting.” Both YouTube cheaters that the episode profiled, Kiki Kho and Nate Igor Smith, blamed the viewer. For example, Kiki argued that she never “promoted cheating” and that it would be “the viewer’s fault” if her advice was heeded. Rather than focus on why such vulnerabilities exist in a teach-to-the-test educational system, the segment closed with banal moralizing about how such cheaters only cheat themselves.

What’s striking about the ABC coverage is that it lacked any of the criticism of the educational status quo that became so central for a number of readers of the earlier Chronicle of Higher Education story—those who were asking as educators either (1) what’s wrong with the higher education system that students can subvert conventional tests so easily, or (2) what’s right with YouTube culture that encourages participation, creativity, institutional subversion, and satire. For example, DrFunZ puts the blame squarely on his own professorial class:

Students somehow believe that if they have the equations in front of them, or a bunch of facts to cheat from they will benefit during an exam. NOW, where exactly did they get that idea? They got that idea by realizing that it sometimes works!! Why does it work? It works when we in the professorate write exams that test simple facts or the ability to just plug in numbers, we make it easy to cheat. But, when we make exams that are more challenging, ones that required students to apply equations/ facts to completely novel solutions, then this method of cheating would go extinct.

Most of the physical science profs in our department allow the students to use texts, notes and internet when doing exams. The exams are brain-burners—all application to novel problems. Rarely will more than a few get an A on the exams. Our mindset is like this: Just knowing formulas and facts is comparable to tossing your legs out the bed in the morning. Yes, you are up and awake, but you haven’t really done anything yet. The real work is yet to come.

In contrast, another person posting comments, Allison, identifies herself as “an arts and crafts person” and expresses her admiration for “the quality—good pictures/right level of detail—of this training video.” Commentator Peter Naegele complains that this kind of story about YouTube could easily be absorbed into “‘technology is making students lazy and stupid’ propaganda” despite the fact that the lazy ones might be the teachers rather than the students.

Many of the educators argued that in the age of ubiquitous computing, depriving students of reference materials does little to test them on their ability to apply concepts in real-world situations. Several provided testimonials to the value of allowing them to bring their own study aids, such as note cards, to examinations. As Herb puts it,

The creation of cheat notes is actually an aid to remembering stuff in the first place. You’ve had to read, organize, re-type, and have things easily at hand when needed. Once you’ve done all that, the likelihood is that you’ll remember stuff without the notes anyway. Tell your students they can bring to the test all the information they can put onto an index card, and watch them learn more.

This attitude reflects current research on so-called distributed cognition and how external markers can help humans solve problems by both making solutions clearer and freeing up working memory that would otherwise be tied up in reciting basic reminders. Many of those commenting on the article also argued that secrecy did little to promote learning, a philosophy shared by Benjamin Bratton, head of the Center for Design and Geopolitics, who actually hands out the full text of his final examination on the first day of class so that students know exactly what they will be tested on.

At least one person who posted a response to the Chronicle article complained that this shortsighted behavior on the part of both test-takers and test-givers could be traced to the emphasis on multiple-choice testing during the administration of George W. Bush after Congress passed the No Child Left Behind Act. At the time that the cheating videos were being debated in the Chronicle of Higher Education, academics focusing on research in higher education were also discussing the so-called Spellings Report from the then-Secretary of Education Margaret Spellings, who argued for more multiple-choice examinations in college to create more quantifiable measures of success.

Pressures to proceduralize education are nothing new. I well remember the drudgery of being an elementary school student forced to work methodically through the color-coded Science Research Associates (SRA) reading cards and their multiple-choice tests. Such cards had been adopted by school districts eager for standardized instruction and measurable results. I recall how my teachers would praise the SRA system as a form of instructional technology that would supposedly prevent quick readers like me from becoming bored by group instruction with slower readers and how the colorful cards and pride of progress were supposed to keep me more motivated than would more amorphous human-delivered lessons without clear benchmarks. The Science Research Associates company was founded in 1938 and acquired by IBM in 1965; it now offers online tutorials as part of the McGraw Hill textbook-publishing empire.

Access to specific types of computational media, now possible in the era of personal and mobile computing, certainly changes the student-teacher dynamic significantly, and the equation of interactivity with engagement is driving educational policy in new ways. In particular, an entire academic discipline devoted to assessing student engagement has blossomed in recent years, as university administrators struggle to recruit and retain students who are tempted to check out for more fulfilling life experiences online. Reports from the National Survey of Student Engagement, the Community College Survey of Student Engagement, and the High School Survey of Student Engagement explicitly make the issue of student engagement a top priority. Well-intentioned administrators may even construct learning communities that are so good at engaging students that they might have little to do with engaging faculty; such communities function instead as self-contained bubbles devoted exclusively to the jurisdiction of student affairs and residential life. Skeptics might even argue that the student-engagement movement encourages the pursuit of the pleasure principle—instead of actual learning objectives that equip students for demanding professions like engineering, medicine, and law. Certainly, even if this movement exists to bring the dorm room and the classroom closer together, to focus exclusively on engagement risks ignoring the existence of potential conflicts between what students want and what faculty want.

This book explores the assumption that digital media deeply divide students and teachers and that a once covert war between “us” and “them” has turned into an open battle between “our” technologies and “their” technologies. On one side, we—the faculty—seem to control course management systems, online quizzes, wireless clickers, Internet access to PowerPoint slides and podcasts, and plagiarism-detection software. On the student side, they are armed with smart phones, laptops, music players, digital cameras, and social network sites. They seem to be the masters of these ubiquitous computing and recording technologies that can serve as advanced weapons allowing either escape to virtual or social realities far away from the lecture hall or—should they choose to document and broadcast the foibles of their faculty—exposure of that lecture hall to the outside world.

Each side is not really fighting the other, I argue, because both appear to be conducting an incredibly destructive war on learning itself by emphasizing competition and conflict rather than cooperation. I see problems both with using technologies to command and control young people into submission and with the utopian claims of advocates for DIY education, or “unschooling,” who embrace a libertarian politics of each-one-for-himself-or-herself pedagogy and who, in the interest of promoting totally autonomous learning in individual private homes, seek to defund public institutions devoted to traditional learning collectives. Effective educators should be noncombatants, I am claiming, champions of neither the reactionary past nor the radical future. In making the argument for becoming a conscientious objector in this war on learning, I am focusing on the present moment.

Both sides in the war on learning are also promoting a particular causal argument about technology of which I am deeply suspicious. Both groups believe that the present rupture between student and professor is caused by the advent of a unique digital generation that is assumed to be quite technically proficient at navigating computational media without formal instruction and that is likely to prefer digital activities to the reading of print texts. I’ve been a public opponent of casting students too easily as “digital natives” for a number of reasons. Of course, anthropology and sociology already supply a host of arguments against assuming preconceived ideas about what it means to be a native when studying group behavior.

I am particularly suspicious of this type of language about so-called digital natives because it could naturalize cultural practices, further a colonial othering of the young, and oversimplify complicated questions about membership in a group. Furthermore, as someone who has been involved with digital literacy (and now digital fluency) for most of my academic career, I have seen firsthand how many students have serious problems with writing computer programs and how difficult it can be to establish priorities among educators—particularly educators from different disciplines or research tracks—when diverse populations of learners need to be served.

In many ways, as the product of a time when the BASIC computer language was widely taught in schools, it could be said that I am much more digitally literate than my own students. Today there are many competing computer languages tailored for the K–12 environment, and programs are launched and dropped depending on the momentary enthusiasm of individual teachers. Thanks to after-school or summer programs, some students from more affluent schools or “geeky” backgrounds may be exposed to creating code in Scratch, Alice, Scheme, LSL, or ActionScript, but at other schools without equipment for hands-on work or teachers with enthusiasm and expertise, digital literacy often means learning to make tedious PowerPoint presentations or create inane iMovies rather than ever learning anything about writing code and authoring programs that can execute commands successfully without bugs. Even though relatively accessible computer languages like Processing have large user bases that create huge libraries of code that can run robots, remix video and music, and create beautiful animations and data visualizations, many schools are actually cut off from access to such adult communities of online learners because of parent hysteria about Internet predators, cyberbullies, and “time wasting.”

In the book "Born Digital," Harvard Law School professors John Palfrey and Urs Gasser attempt to present a balanced approach to a wide spectrum of online learning venues. Overall, they make a positive effort to engage both educators and students in the conversation by including both costs and benefits of new technologies, addressing both young and old, and getting beyond hype about gadgetry and youth rebellion to focus on the digital dossiers being created on all citizens. Thus Palfrey and Gasser attempt to move the focus from broad generalizations about kids today to the more subtle ambiguities involved in topics such as privacy and consent. Despite my respect for Palfrey as a colleague in the field of digital media and learning, I think he might still rely on four potentially destructive cultural clichés about how “they” are different from “us”: (1) “They all have access to networked digital technologies. And they all have the skills to use these technologies”; (2) “And they’re connected to one another by a common culture”; (3) “They are joined by a set of common practices”; and (4) “Digital natives can learn to use software in a snap.” Although the rise of mobile computing on cell phones has enhanced the technological abilities of many youth from low-income homes who grew up without access to desktop computing, the ability to tinker meaningfully becomes increasingly difficult with such black-boxed devices that rely on business models that perpetuate a push toward miniaturized components, planned obsolescence, proprietary code, and noninterchangeable parts. I might say to Palfrey that there are huge curricular costs to assuming knowledge transfer without obstacles, and that the term digital natives has been widely adopted by news outlets relatively uncritically, even as researchers point to how poorly digital natives may score on tests of basic digital skills, many of which depend on an ability to read fine print rather than respond to showy technical wizardry.

Much of the argument for the existence of a digital generation was actually made many years earlier by those who commented on the rise of so-called “television children” who had supposedly become dependent on an earlier kind of electronic screen. Such young people could no longer respond to traditional learning delivery systems, Marshall McLuhan insisted, and would be more likely to thrive if they were taught by modern closed-circuit televisions with specialized programming.

Our entire educational system is reactionary, oriented to past values and past technologies, and will likely continue so until the old generation relinquishes power. The generation gap is actually a chasm, separating not two age groups but two vastly divergent cultures. I can understand the ferment in our schools, because our educational system is totally rearview mirror. It's a dying and outdated system founded on literate values and fragmented and classified data totally unsuited to the needs of the first television generation.

McLuhan’s assertions about how to address the needs of a new generation might have seemed highly logical in the context of this 1969 Playboy interview, since a body of work from a number of different researchers who were exploring both the dangers and possibilities of contemporary media had already accumulated. A 1962 government report listed a large bibliography of studies with titles such as “An Investigation of Closed-Circuit Television for Teaching University Courses,” “The Potentialities of Closed-Circuit Television: Teaching in Colleges and Universities,” and “Closed-Circuit Television as a Medium of Instruction at New York University.”

Researchers examined the more open medium of broadcast television as well, as the presence of “An Experimental Study of College Education Using Broadcast Television” indicates. According to McLuhan and other enthusiasts, younger consumers needed “cool” media such as television, which engaged all the senses and required pattern recognition and the supply of missing information by the viewer, rather than “hot” media such as print.

Some scholars argue that the empirical evidence for the existence of a digital generation is notably absent and that this rhetoric may have a number of other practical consequences beyond the ones that I have outlined. For example, Siva Vaidhyanathan argues in a 2008 essay called “Generational Myth” for the Chronicle of Higher Education that “not all young people are tech-savvy.” In his opening paragraph he notes the dystopian and utopian rhetoric that constitutes the terms of the debate:

Consider all the pundits, professors, and pop critics who have wrung their hands over the inadequacies of the so-called digital generation of young people filling our colleges and jobs. Then consider those commentators who celebrate the creative brilliance of digitally adept youth. To them all, I want to ask: Whom are you talking about? There is no such thing as a “digital generation.”

Vaidhyanathan draws on his personal experiences as a faculty member to point out that each of his classes has “a handful of people with amazing skills and a large number who can’t deal with computers at all,” and quotes my own reservations about digital literacy in the article. He notes that libraries and traditional print culture are thriving (and struggling) among people of all ages. He also makes a broader point that “generations” tend not to function well as descriptive cultural categories to characterize historical periods of change.

In the case of technologies associated with computational media, I think it is quite interesting to contrast older early adopters who experimented with new technologies for teaching and creating media, sometimes decades before these practices became widespread, with younger members of a more unevenly prepared digital generation in need of mentorship. Ironically, many of those early adopters are exiting the classroom as they reach retirement age, so the trend may actually be toward computational ignorance rather than computational enlightenment as time goes on. I also argue that the digital generation functions more as an ideological construct than as a demographic label for a group of subjects who can be studied empirically. When I talk about the myth of a supposedly identifiable and technologically empowered digital generation, I mean to use the word “myth” in both senses: as a refuted falsehood and as an origin story. Recent research in the social sciences may indicate that there are significant deficits in computational literacy among younger computer users. But a “myth” is also profoundly true, because of its explanatory power.

Promoters of gadget-oriented instructional technology argue that students in the digital generation need to be connected to a technical device in order for there to be any hope of engagement with formal education. Consider the home page of “Engaging Technologies” as an example of how the current instructional technology movement speaks about “educating a new generation.” In a page of links to what seem to be research findings about “Why Use Clicker Technology?,” the company boasts about the benefits of its technology specifically in reaching the young:

Each student in your class has a wireless handheld response pad, or clicker, with which they are able to answer questions. You, the teacher, pose a question verbally or through the computer onto a projector or television screen, and the students respond with their handheld device. Clicker technology, very similar to the familiar feel of a remote control in their hands, is comfortable and fun for the Net Generation. They instinctively know how this “gadget” works, and it makes learning fun. After students respond, you can then see immediately how students answer and if they understand the material being taught. This allows you to remediate if needed or forge on ahead with new material if it is clear that the students are ready for it; clickers provide true data-driven instruction. The ability to pose verbal questions and receive immediate feedback from the eInstruction clicker will also allow you to totally change the dynamics of that usually tiresome lecture time. The classroom suddenly becomes engaging and interactive, and your instructional time becomes much more effective. (Emphases in the original)

Notice not only how engagement and interactivity are praised and conflated, but also how the rhetoric of novelty in consumer electronics and of short attention spans comes into play.

Despite being busy stage-managing increasingly complex PowerPoint presentations or elaborate clicker quizzes recommended by instructional technologists, professors notice student apathy and preoccupation, and their feelings do get hurt. Bring up the subject of student engagement and digital distraction at any faculty gathering and brace yourself for tales of woe involving laptops, cell phones, and other instruments of pedagogical subversion that seem to further distance instructors from their students. When the New York Times ran a series of articles on the theme “Your Brain on Computers,” public response was particularly strong to a story called “Growing Up Digital, Wired for Distraction.” Some of the teachers in the article had complained that administrators were pandering to shorter attention spans. “When rock ’n’ roll came about, we didn’t start using it in classrooms like we’re doing with technology,” a particularly forlorn Latin teacher lamented. Unlike the predator panic or the cyberbullying panic of recent years, where the older generation could step in and protect the young from their own computers, the distraction panic posits that there is an unbridgeable generational divide that creates a potentially disastrous rupture in norms of the classroom and, inevitably, in the fabric of society as well. Because of the inability of authority figures to contain the dysfunction, distraction seems to be a problem with no foreseeable solution.

In contrast, some scholars have argued that this inability to focus might actually be preservative in an age of rapid-fire distributed stimuli. For example, Duke professor Cathy Davidson has defended multitasking as a way of protecting against the dangers of “attention blindness” in which sustained attention with a narrow focus might actually cause people to miss crucial information in the larger scene. In "Now You See It: How the Brain Science of Attention Will Transform the Way We Live, Work, and Learn," Davidson asserts that we should learn the central lesson of a famous cognitive experiment in which research subjects told to count the passes of a basketball miss the fact that a person dressed in a gorilla suit enters and exits the center of the basketball court scene that they are intently watching. According to Davidson, “our digital age demands a different form of attention than we’ve needed before” now that our “primary information source is Google, where a search for information about ‘attention’ turns up 371 million entries, and there’s no librarian in sight.”

Excerpted from “The War on Learning: Gaining Ground in the Digital University” by Elizabeth Losh. Copyright © 2014 by Elizabeth Losh. Reprinted by arrangement with the MIT Press. All rights reserved.
