Imagine this scenario: Economic and military pressures drive the rapid development of artificial intelligence (AI) technology. Despite engineers’ good intentions, machines advance toward a fully autonomous existence. Panicked, humans try to reverse course, but it’s too late. A military drone makes a conscious decision to kill on its own terms. AI is now self-aware, and continues to learn and evolve at an unprecedented rate. Human decisions are no longer needed. Humans, in fact, are irrelevant.

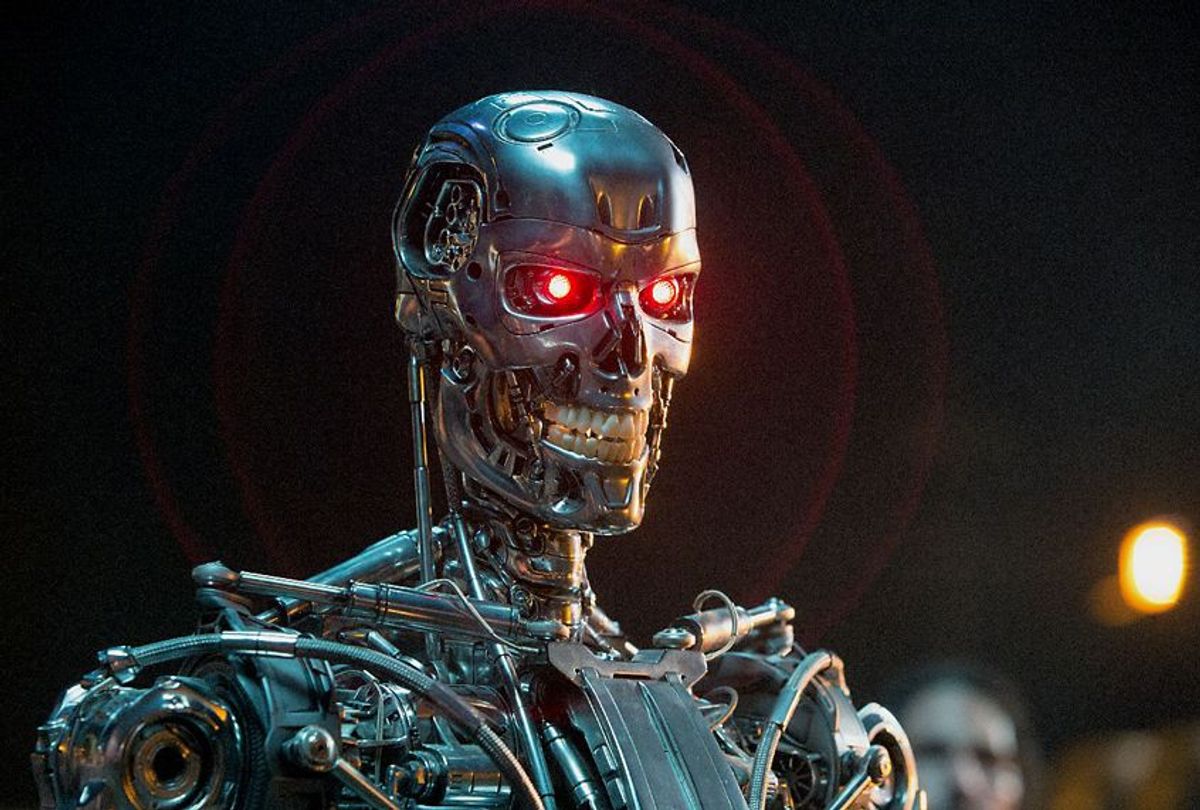

While this might sound like Skynet, the evil AI system from the "Terminator" franchise, this is a real-world scenario that some researchers believe could unfold if we’re not careful. Legendary physicist Stephen Hawking has warned against the dangers of AI, hinting that it could take off on its own and, if it did, “Humans, who are limited by slow biological evolution, couldn't compete, and would be superseded.”

In August, Tesla and SpaceX CEO Elon Musk issued a stark warning via Twitter: "If you're not concerned about AI safety, you should be." Musk has been a vocal critic of unregulated AI, fearful of its unfettered development. He too envisions a future where Skynet could materialize if we don’t take precautions.

But those who are actually involved in the development and programming of intelligent behavior by machines say artificial intelligence taking over the world is unlikely -- although it could develop some level of awareness.

“Present fears of Skynet are dramatically overblown,” Adam Coates, an operating partner at Khosla Ventures, told me. “It’s based on too many sci-fi films and charlatan attention-grabbing.”

Ben Brown, a software developer and co-founder of Howdy.AI, agreed with Coates. “The idea that there’s going to be a machine with some malicious intent is crazy,” he said. “People like to imagine the evil robot scenario, or even the good robot scenario, because AI brings that kind of image to mind.”

As Brown explained, people are not aggressively working on talking robots or androids -- or any type of machines or systems that have conscious awareness. People are working on discrete pieces of technology, like machine learning or natural language processing algorithms, which do not combine to make a thinking machine.

The AI tools we have today, Coates said, are incredibly powerful and will change the world. Even so, they are heavily dependent on data and human engineering to solve tasks and are nowhere near as versatile as humans. “My 2-year-old son can learn new tasks with fewer examples, has better language skills than any chatbot, and intuits ways to push his parents' buttons with ease,” Coates said. Although he’s confident that AI research will be able to make progress on individual abilities, he said it’s impossible to say how we will bring them all together into one framework that can match a toddler. “To construct consciousness similar to an adult brain, which is still poorly understood, is even more difficult.”

Consciousness remains both a neurological and a philosophical puzzle. Scientists still haven’t pinpointed the actual brain processes that make up awareness, and philosophers have not reached a consensus on the nature of this baffling state. We do know it’s a part of what allows us to exist and to be aware of not only our surroundings, but also what lies inside ourselves. To intentionally recreate consciousness or any level of self-awareness and program machines to think on their own is currently impossible. Machines may be able to learn and evolve in their ability to provide educated responses, but that process still requires human programming.

“Contemporary AI is like statistics applied to big data sets to make predictions,” said Brown. “It only does what we tell it to do and the predictions are only used in the ways that we apply those predictions.”

Unlike Brown, Martin Ford, futurist and author of "Rise of the Robots," said although AI is specialized and can only do specific, narrow things, it does so with proficiency that outmatches most humans. He gave AlphaGo, a project created by Google’s DeepMind division in London, as an example. The objective was to create a computer system that could win at the ancient game of Go, but the system surpassed all expectations. “AlphaGo taught itself to play the game by playing thousands of practice games against itself,” said Ford. “Eventually it was able to defeat the very best human players in the world.”

He doesn’t think the threat of conscious machines is imminent, but said we shouldn’t dismiss our fears. “The brain is basically a biological machine, so I see no reason a competing intelligence can't be created in some other medium,” he said.

Joanna Bryson, a computer scientist and researcher at the University of Bath in England, said, “If it occurs at all, Skynet will still have human components.” While she doesn’t think we’ll ever see humanoids like Ava from "Ex Machina," she does see hope for "Blade Runner’s" Rachael, a replicant with implanted human memories. “We could see Rachael, but only if she's a biological clone, rather than mechanically engineered one,” Bryson mentioned.

Alex Champandard, co-founder of creative.ai, sees AI materializing in much less flamboyant ways than Hollywood portrays in its films. “Trucks will slowly be automated, starting with partial automation, and large numbers of people will have their livelihood changed or will have to change jobs,” he told me. For example, retail workers may see staff reductions as machine learning replaces parts of their jobs.

The greatest concern among those working in the field is not a Skynet scenario or AI becoming superior to humans. It’s the threat of scale in the context of automation. “The real scary thing, in my mind, is the application of technology at the scale that we’re seeing now has never before happened in human history,” said Brown. He said it’s not necessarily that the algorithm might go crazy. It’s that the algorithm might affect the lives of billions of people. He compared Skynet to Facebook’s newsfeed algorithm, and said these types of algorithms are not fully understood or controlled.

“Facebook runs on artificial intelligence, but 70 percent of FB engineers who use #AI aren’t experts,” Ford recently tweeted, citing an article that referred to AI algorithms as “inherently black boxes.”

Today's machine learning technologies, which make up the bulk of these so-called black boxes, can be used in fraud, cyberattacks, military applications, surveillance, and countless other areas. There is also a serious potential for forms of bias and prejudice to get calcified into products in ways we don't initially expect.

“We need to push forward, and we need to be clear-eyed about the risks. Worrying about Skynet makes disaster more likely rather than less likely,” Coates warned.
