I know I am not alone when I say I think of Google as my second brain. It’s there when I want to remember something trivial, like that actor’s name in that movie or the title of that song stuck in my head. It’s there when I’m doing research on a guest or when I’m talking with my production team about an author or a recent article I read.

Need the answer to a question? Just google it. Considering that Google processes 40,000 search queries every second, which translates to 1.2 trillion searches per year worldwide — I googled to get that stat, by the way — it’s safe to say that nearly everyone with an internet connection or a smartphone has adopted search engines as their backup brain.

Empowering, right?

But what if using this backup brain is backfiring on us? What if the tools we use to retrieve mundane pieces of information are instead delivering oppressive ideas and hateful ideologies — even swaying elections?

When Dr. Safiya Umoja Noble was in Library Information School, she began to notice something that made her extremely uncomfortable: Everyone used Google as if it were the new public library.

Noble had worked in marketing for 15 years before returning to grad school to study library science, and had always seen Google for what it really is: an advertising platform. And we, the searchers, aren’t its customers. Advertisers are.

“So you have this combination of paying to optimize content, paying to make content visible, and then people clicking on that content — which signals it's credible or viable,” Noble told me in our interview for "Inflection Point."

What does this mean for the rest of us?

“Certain kinds of industries or ideologies can wholesale take over keywords and identities and communities,” she said.

That’s right: the information you see in those search results is heavily manipulated through a complicated digital dance, also known as an algorithm.

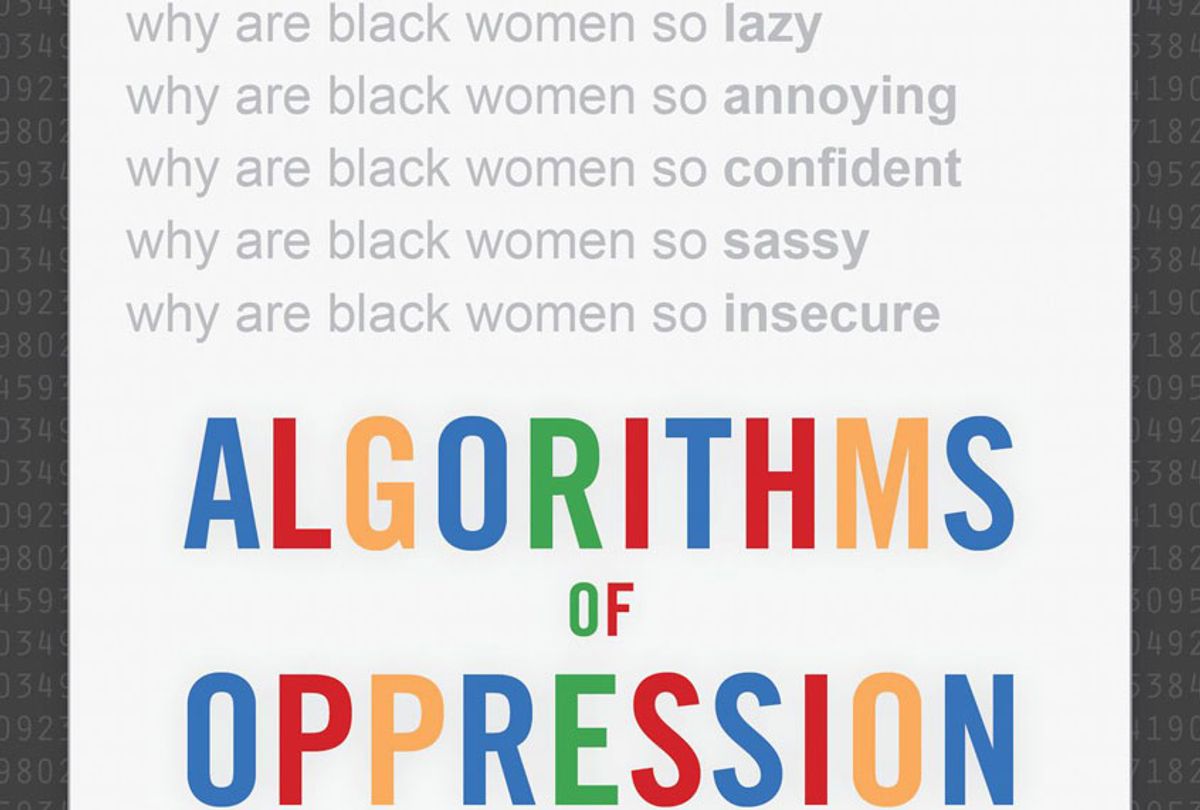

One day a colleague of Noble's, Dr. Andre Brock of Georgia Tech, suggested she google "black girls." When she did, she saw that the top search results were images that perpetuated racial stereotypes, misogyny and exploitation.

“It wasn't just black girls, of course,” she told me. “It was Latina girls, Asian girls, who are also kind of victims of being pornified in Google search results.”

That discovery was the beginning of an investigation that eventually became Noble’s book, “Algorithms of Oppression: How Search Engines Reinforce Racism.”

We think of the internet as “the great equalizer,” but the algorithms behind it, which are created by humans, serve up content that is sexist, racist and biased.

Listen to my full conversation with Dr. Safiya Noble, researcher and assistant professor of racism, gender and tech at the Annenberg School of Communication at the University of Southern California.

Find more stories of how women rise up on the Inflection Point podcast on Apple Podcasts, RadioPublic, Stitcher and NPR One. And come on over to The Inflection Point Society, our Facebook group of everyday activists who seek to make extraordinary change through small, daily actions.
