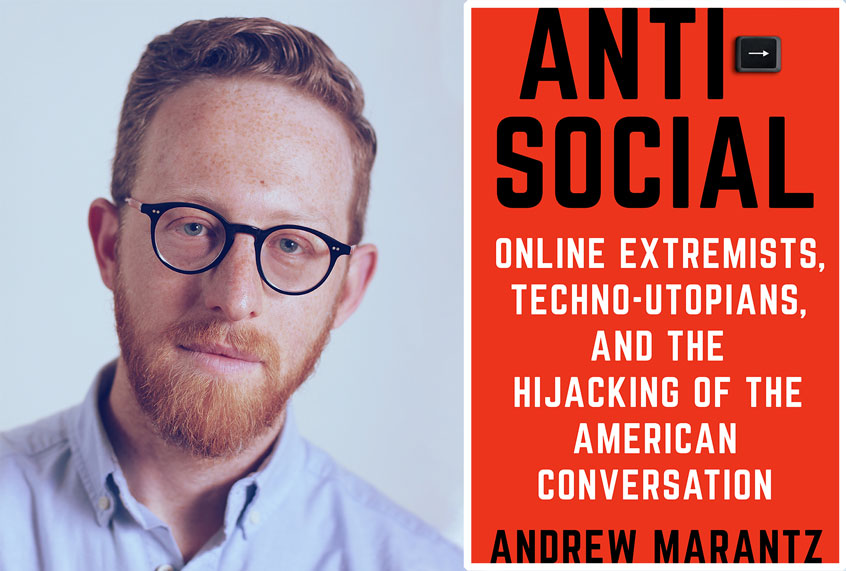

New Yorker writer Andrew Marantz put his sanity on the line in order to take a deep dive into two parallel worlds: the reactionary universe of the alt-right, and the technological triumphalist paradigm of Silicon Valley. The latter is responsible for selling us a utopian vision of the internet that would (supposedly) bind humanity, yet which instead has stoked political division and created, indirectly, the alt-right. In “Antisocial: Online Extremists, Techno-Utopians, and the Hijacking of the American Conversation,” Marantz offers readers an explanation as to how social networks created by idealist techies were co-opted by a rat’s nest of bigots, trolls, and fascists, who exploited the supposed “neutrality” of platforms to spread hate.

Marantz spoke to Salon about his new book and how the internet is changing democracy. This interview has been lightly edited for length and clarity.

This is a book about how the advent of social media really opened up the social and political discourse in this country to the people that Hillary Clinton aptly deemed a “basket full of deplorables.”

I think the deplorable part was more apt than the basket part. But, yeah.

How much do you think tech matters in the rise of not just the alt-right, but Donald Trump?

Tech matters hugely for the rise of Trumpism and for nationalist populism around the world. That’s not to say that it’s the only source of energy for those movements, and it’s also not to say that there aren’t huge benefits to opening up discourse in all kinds of ways.

I don’t want to paint a monochromatic picture or say that tech has only led to deleterious outcomes or antisocial outcomes, but I think for too long there was this tacit assumption among tech bros, and also just among the general public, that on the whole the internet would make the world more open and connected and set us all free and make it more fair and tolerant and generally be pro-social. And I think we’ve seen pretty definitively that that hasn’t entirely been the case.

What I like about your book is you really do talk about some of the historical antecedents to the rise of social media. This is something that I’ve been boring my friends with, pointing out, you know, it’s not like the printing press didn’t lead to violence, too.

Exactly, right. In fact, it led to many of the same kinds of violence, antisemitism, bigotry, and misinformation. None of this is new. It’s just that we’ve kind of forgotten those lessons of history.

As you said, the tech industry was very idealistic, promoting the idea that social media would connect people, would help spread good ideas. The Arab Spring was a big marketing event for social media, even as it was a real thing in the real world. Why do you think there was an unwillingness to look at the possibility that it would also give rise to hate speech, violence, bigotry, things like that?

Part of it is just that it’s uncomfortable and unpleasant to think about bigotry and hate speech. So people would rather not do it.

I’ve faced that even while reporting this book. I like to think that the book is engaging and even darkly funny. But I’ve had people indicate that they are scared to read it, because they would just rather not think about this stuff.

I think that the only way out is through, which is why I used James Baldwin as an epigraph. Nothing can be changed until it’s faced.

I happen to think this stuff isn’t as depressing as people worry it’ll be, but that’s a separate topic. But yeah, it’s not fun to think about.

Also, we tend to get stuck on this dichotomy of optimism versus pessimism, and I think that’s just the wrong way of thinking about these things. A more useful frame than either optimism or pessimism is what I refer to as contingency.

Tell me more about that.

I borrowed that concept from the philosopher Richard Rorty, who wrote one of my favorite books called “Contingency, Irony, and Solidarity.” And contingency, boiling it down a lot, just means we don’t know what’s going to happen. We can’t have a teleological view of the future. We can’t assume that things are pre-ordained to happen in a certain way. We have to look at the facts as they are and make an informed judgment about whether we’re going to inevitably have a more perfect union and inevitably make progress.

Often we just assume that those things will just happen inevitably. A more contingent view of history would suggest that they don’t happen automatically, and they don’t happen at all if you don’t work for them to happen.

In this book, you often explore your own emotions about institutions versus the “disruption” of the tech industry, and express some longing I think for some of the gatekeeping power that old institutions had before the time of social media. Having gone through the entire process of writing and publishing and now marketing this book, where do you fall on that scale right now?

I see it as a trade-off. By no means do I want to pretend that the whole gatekeeper model was perfect or even very good. It was flawed in all kinds of ways. It was run by upper middle-class white men who didn’t have a very nuanced view of the country for the most part. There were all kinds of problems with corporate control. Nothing about our past should be construed as a golden age.

It’s just that when you tear down all those structures, and don’t even try to even pretend to think about what’s going to replace them, or you replace them with something worse, then the problems don’t get better. They get worse.

Before there was even the term “alt-right,” feminists and social justice activists were observing that social media was allowing uglier forms of bigotry to flourish. A lot of the blame was put on the white maleness of the tech industry, on the idea that there was a blindness among the people running it. How much credence do you give to that?

I definitely give credence to it. I think the basic critique that you can’t really readily empathize with people if you don’t have representation of all people within your company, I think is an apt critique.

But I don’t think that it would solve everything if the tech companies had diversity and inclusion statistics that accurately reflected the country. I think that would help, but I think the problems are deeper and more systemic than that.

The discourse around this particular problem has often been one of ignorance, right? There’s a sense that a lot of the white male leaders of the tech industry have progressive values.

Some of them.

On the other hand, you ran into Peter Thiel, at the DeploraBall. Do you think that’s an element of the problem right now? That we just have bad actors in tech leadership?

Well, my critique of Peter Thiel in the book was not based on his support for Trump, although I find his support for Trump extremely regrettable. My critique of him was based on the fact that I tried to engage him in a dialogue about the fundamental problems at Facebook, which arguably could not have become as successful as it is without his early investment. And instead of answering my critiques, he just left and didn’t respond to me at all. And he didn’t respond to follow-up questions during the fact-checking process.

So my critique of him is really more substantive. I mean there are lots of Trump supporters in the country, Peter Thiel being one of them. I can debate with them or decry their views, but really my issues with Thiel are that he got rich off of Facebook. I’m just curious whether he knew that there would be all these negative impacts on our society, in addition to the positive ones. And if he did know, why he didn’t do more to stop it?

Shifting over to the systemic issue, in this book you analyze the concept of virality, which you frame in a Darwinian sense. Could you explain that more for some of the readers of Salon?

There’s this concept of the old gatekeeper outlets, specifically the New York Times, that they give you all the news that’s “fit to print”. It struck me that that word, “fit”, has an unintended double meaning. They mean “fit” as in what’s appropriate.

But social media algorithms, for the most part, are not concerned with what’s appropriate. They’re concerned with what gets engagement. Engagement can be anything. It can be positive engagement like likes, and it can also be negative engagement like hate reads or shares for the reason of saying, take a look at this asshole. Algorithms are neutral with regards to that. Their job is to keep you hooked and keep your brainstem fired up so that you stay on the platform.

That’s what leads to this Darwinian outcome. A Darwinian system, like nature, doesn’t care whether you’re surviving by being nice or surviving by being red in tooth and claw, as they say. All that matters is whether you pass on your genes.

The same is true in the world of memes, which is a term coined by a very prominent Darwinian biologist to explain how ideas struggle for dominance. These systems create a world in which the way that ideas struggle for dominance is pretty red in tooth and claw.

On Twitter, if you write a “look at this asshole”, you get yelled at by people saying don’t give trolls attention, that it just raises their profile. The counter argument to that is, if we don’t look at it, we can’t criticize it. How do you kind of feel about the debate? Is there anything that we, as individuals, can do to change the incentive structures of the internet?

To take the first part first, there’s no good answer to the question of how to respond to noxious trolls, because if you give them attention, even negative attention, then you’re feeding them oxygen. If you ignore them, then you are risking seeming complicit. So that’s why that’s such a vexing problem because there really is no good individual answer to it.

Systemically, there are ways to tweak the systems to diminish that kind of stuff, but as a person, I don’t really see an easy way out.

I think the flippant answer that a lot of people land on is to just say, I’m just going to log off, which is really good for your mental health. And I recommend it for a lot of people in a lot of circumstances. But you don’t get to log off of the country, right? So these systems can still be affecting who your president is, and whether you get to live in a country that has environmental regulations and all the rest of it. So you can’t opt out of that.

So let’s talk about some of those trolls, because they are admittedly fun to talk about. You spend a lot of time in this book engaging them as personalities. You went to the DeploraBall. The right-wing trolls, they really think of themselves as rebels. It’s both their identity and their justification. But I couldn’t help but think that their ideologies are just old-fashioned racism and sexism, their behavior is like something out of a bad beer commercial and their jokes reminded me of the lame jokes from some high school locker room. How on earth are they holding themselves out as rebels?

Yeah, they’re really bad at it. Yet it’s still really effective. It reminds me of the kind of comedian who is not a good comedian but just gets laughs anyway because he or she — let’s be real, it’s usually a he — will just say whatever makes the audience uncomfortable and get those kind of uncomfortable laughs from the crowd. It’s just cheap.

A lot of this rebel attitude is so central to a lot of these people that “The Rebel” is a name of one of their big media companies. This woman, Faith Goldy, got fired from The Rebel. The line she crossed was going on a Nazi podcast during the march in Charlottesville. When she got fired for that, there were commenters online who were saying to the owners of that media company, “You guys should change your names from The Rebel Media to The Conformist Media.”

And it’s like, so being a Nazi is being a rebel? That’s just a really, really shitty and unoriginal way to be rebellious, in addition to being terrifying and disgusting and all the rest of it.

When I think of the Nazis, I don’t think of people that were really into rebellion as a concept.

[Scoffs] Yeah, free spirits.

One of the interesting paradoxes of the entire alt-right is they often get called contrarian, provocateurs, transgressive, but the values they stand up for don’t remind me of transgression at all. They just remind me of the same old sexism and racism that was socially normative when I was growing up.

Well, they’re transgressive in the sense that they’re saying things that you’re not supposed to say in polite company. That doesn’t make it interesting or cool or original. It does make it transgressive in the sense that you can offend people. You can freak them out. And then, if you created a set of norms within your own little subculture that declares that anybody who ever gets offended or freaked out is therefore triggered and whatever, then okay. By the terms of that self-sustaining logic, you can quote unquote “win”, because you triggered people. But ultimately, you’re not serving any very interesting end other than having a moment of infamy.

There’s a lot of hiding behind the term “free speech”, which is treated as a kind of empty set value, because nobody’s really taking anyone’s free speech away. Why do you think that there’s so much emphasis on topics like free speech and supposed transgression, and very little about the actual content of this “free speech”?

This is part of why I thought it was so important to actually spend hours and months and years of my life hanging out with these people. It wasn’t because I enjoyed it, or because I preferred to get dinner with Mike Cernovich and Jack Posobiec rather than hanging out with my wife and child. It was because the real way to understand how these people think and talk and interact with each other is just to hang around them for long enough that they forget you’re there.

The free speech thing is one example of that. They can talk about this stuff in the abstract and use language that sounds really principled, but when you hang around for long enough it becomes clear that it’s just a tool they’re using. Like anything else, it’s a weapon.

If they can use “free speech” as a talking point to advance their agenda, they’ll use that. If they can use something else, they’ll use something else.

At no point did I want to be so contrary to the contrarians that I became anti-free speech. It’s just that when you see what’s really motivating them, you can see through their game. But that only happens when you put in the time.

Having spent as much time as you did with them, do you have any advice for readers on self-care and mental health management when faced with this vile stuff?

I do think it’s appropriate a lot of times to step away from it and log off. Being a keyboard warrior 24 hours a day is not healthy for anyone. But I also wouldn’t want to live in a world where social media were only inhabited by nihilists and trolls and bigots. So I don’t want all good people in the world to permanently abandon all internet spaces. But I do think it’s worth just stepping back and thinking in a slightly broader way about what is all this and what are we trying to accomplish?

The larger frame of the book is about the hijacking of a national conversation. On any given day, you can sit out all of the trending topics and not weigh in and not even know they’re happening and you will be totally fine. The world will go on without you and you won’t really miss anything.

In a larger, more sustained sense, I do think that part of the job of being a fully engaged citizen of a putative democracy is to have a stake in the national conversation. If part of that or a lot of that is happening online, then so be it. But I think it’s just worth doing with as much of a clear head and a sense of purpose as possible.