The first time I met a blind scientist, I was in an NSF Research Experience for Undergraduates at the University of Delaware, a program geared toward disabled students interested in pursuing STEM research. Until then, it had never occurred to me how science education excludes blind students. In my daily classes, professors drew molecules on the board or wrote out equations on the assumption that everyone in the room could see them, and they rarely even said aloud what they were writing. But what about the blind and low-vision students in the classroom?

According to the National Science Foundation's 2021 report on Women, Minorities, and Persons with Disabilities in STEM, as of 2019, 9.1 percent of those graduating with a doctoral degree reported having a disability, a group that includes people who are blind or low-vision. Inclusive teaching methods are needed to increase accessibility in STEM.

A recent paper from Baylor University, led by Katelyn Baumer and published in Science Advances, was inspired by exactly this problem. Bryan Shaw, a Baylor chemist and the paper's senior author, designed a study to assess whether people could learn to recognize 3-D models, like those often used to teach science, with their mouths instead of with their eyes. "I probably wouldn't be working in this area if it was not for my own child who is visually and hearing impaired and autistic," Shaw said in an email interview. His son's diagnosis of retinoblastoma at such a young age changed Shaw's view of science and pushed him to make his field more accessible to blind and low-vision scientists.

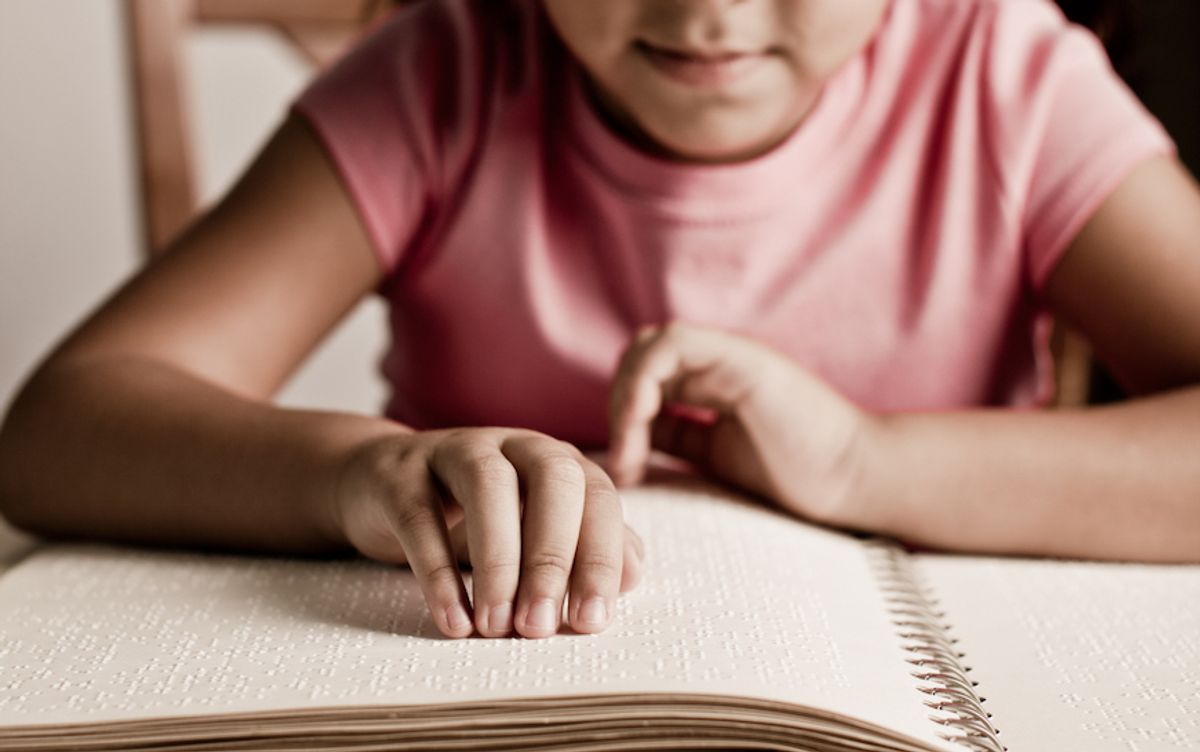

Shaw is certainly not the first person to take advantage of the ability of our mouths and lips to discriminate between objects in space. For example, a Hong Kong student named Tsang Tsz-Kwan taught herself to read Braille with her lips. Although this is not a traditional way to learn Braille, her case suggests that the mouth can recognize and distinguish patterns.

Shaw's research builds on brain-imaging findings that the sense of touch, called somatosensory input, from our tongues, lips, and teeth converges on the primary somatosensory cortex, where it can produce a mental image generated entirely by signals from our mouths. The primary somatosensory cortex is the region of the brain that receives and processes touch input.

A 2021 paper in Nature found that primates showed the same brain circuit activation when grasping objects with their hands as when moving an object with their tongue. This suggests that physical manipulation by the hand and by the mouth may share underlying similarities, but much remains unknown. Signals from manipulation with either the hands or the mouth are sent to the cerebral cortex, but as Shaw points out, "the fine structure of how it is all sorted and processed remains unknown."

Baumer, Shaw, and their colleagues found that recognizing these models by mouth was comparable to recognizing them by hand. The study included 365 college students and 31 grade-school students in the 4th and 5th grades. The participants were blindfolded and split into two groups: one manipulated the objects by hand, and the other manipulated them with only their mouths. Each participant was given a single protein model to study. They were then asked whether each of a set of eight other protein models matched the original they had been given. Of those eight, one was a match.

Shaw expected the results to be similar across the two age groups because, as he put it, "Oral somatosensory perception is hardwired into us, the tongue develops very early and we likely start doing oral somatosensory perception in utero." The research team found that students in both age groups successfully distinguished between the models, and in about 41 percent of participants, recall of the structures was more accurate when the models were assessed through oral manipulation alone.

Part of the design process was making the models portable, convenient, and affordable, since they would eventually have to be mass-produced. As Shaw noted, biochemistry textbooks often contain more than a thousand illustrations, which underscores the need for the models to be small and cheap. The first person Shaw gave the models to was Kate Fraser, a science teacher at the Perkins School for the Blind in Massachusetts, Helen Keller's alma mater. He chose the school because its staff had offered his family significant support, traveling to their apartment to provide intervention help after Shaw's son had his eye removed.

Although this study did not involve blind or low-vision students, it lays the groundwork for studies that include them. Recognition by mouth with these models was comparable to recognition by hand, and the approach may offer a way to make science more accessible, which is the ultimate goal. Increasing the number of disabled scientists will bring more diverse perspectives to STEM, which will ultimately lead to better science.
