No one wants to be falsely accused of saying or doing something that will destroy their reputation. Even more nightmarish is a scenario in which a person is innocent, yet the fabricated "evidence" against them is so convincing that they are unable to clear their name. Yet thanks to a rapidly advancing type of artificial intelligence (AI) known as "deepfake" technology, our near-future society will be one where everyone is at great risk of having exactly that nightmare come true.

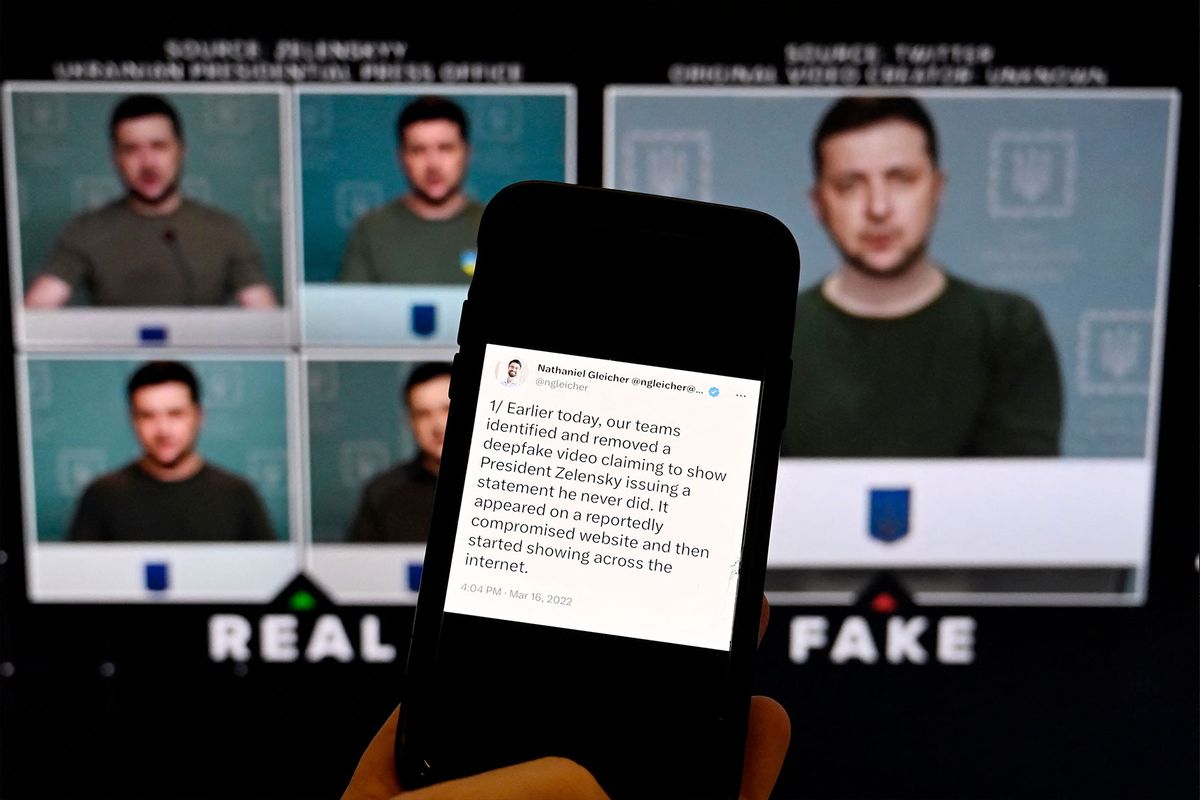

Deepfakes, or videos that have been altered to make a person's face or body appear to do something they did not in fact do, are increasingly used to spread misinformation and smear their targets. Political, religious and business leaders are already expressing alarm at the viral spread of deepfakes that maligned prominent figures like former US President Donald Trump, Pope Francis and Twitter CEO Elon Musk. Perhaps most ominously, a deepfake of Ukrainian President Volodymyr Zelenskyy attempted to dupe Ukrainians into believing he had told their military to surrender to Russia.

What's more, producing a deepfake no longer requires the resources of intelligence agencies or Hollywood digital artists. Off-the-shelf tools are now so easy to learn that even a marginally computer-savvy person can produce a relatively convincing deepfake. That means ordinary citizens can also become targets of deepfakers' malice, as I learned the hard way on Feb. 28, when "Dilbert" cartoonist Scott Adams tweeted an anonymously created deepfake of me.

"One particularly disturbing example of malicious use of deepfakes is revenge pornography."

Although Twitter eventually took down the deepfake, many people who saw it before then told me they had no clue it had been altered. Even some of my closest friends initially thought the video was authentic. The clip used footage of my face and voice, disturbingly altered to depict me promoting the great replacement theory, an antisemitic conspiracy theory that holds, among other things, that Jews live only among other Jews while aiming to destroy the white race through integration. More than 50,000 of Scott Adams' followers saw the clip before it was taken down.

If current trends continue, many others will soon have experiences similar to mine.

How did deepfake technology become so accessible and so easy to use that it could usher in a new political and social crisis? To answer this, Salon spoke by email with Dr. Siwei Lyu, the Empire Innovation Professor of Computer Science and Engineering at the University at Buffalo School of Engineering and Applied Sciences and an expert in deepfakes. As Lyu explained, the AI used to make deepfakes works by analyzing countless images and video frames of a given target.

"For example, if someone wants to create a deepfake of a celebrity, they would gather thousands of images or video clips of that person from different angles, lighting conditions, and expressions," Lyu told Salon. Once the deepfaker has what they need, their data is fed into something called a deep neural network, or a computer model that has been trained to recognize a person's or object's key features and use them to generate convincing false video. Deep neural networks are iterative, meaning they will continue to improve their output as they process increased amounts of data.

Perhaps the most unsettling of all deepfake tactics is the manipulation of a person's face. Lyu explained that if a deepfaker wants to "face swap" (transpose a target's face onto someone in an authentic video), "this involves generating a realistic image of the new face and aligning it with the original face's position, angle, and lighting conditions." By contrast, if a deepfaker merely wants to put words in a target's mouth, a technique known as lip-syncing, the model alters the person's mouth movements and manipulates their voice so that they appear to say whatever the malicious party chooses.

"The resulting video may need further adjustments to ensure the final video looks seamless and realistic," Lyu concluded. "This can involve blending the edges of the manipulated areas with the original footage or correcting any inconsistencies in lighting and color."

"The current state of generative AI means that often there are problems with the hands, waxy skin, unrealistic shadows, eye reflections that don't make sense in the real world."

Sam Gregory, executive director of the human rights nonprofit WITNESS, has for five years run a global initiative called "Prepare, Don't Panic" that focuses on preparing for the rise of deepfakes. He broke down why deepfake technology has become easier to use and more accessible in recent years.

"One of the advances in recent years in audio and making short videos from images has been to require fewer and fewer images, or indeed just one image or just one short clip of audio, to create a simulation of a particular individual," Gregory told Salon through direct messaging. Because full-fledged face swaps require tools known as "Generative Adversarial Networks" (GAN) that use two competing neural networks to perfect the final product, "full-face deepfakes in real-life scenes remain challenging to do really well." This, however, is not true of less ambitious deepfakes, such as those which rely merely on audio or on animating a still photograph.

"Lip-sync dubbing and puppetry tools are among the most accessible — the idea being that you can make someone's lips match an audio track, or you can animate a human-looking avatar to speak," Gregory explained. "Recent advances in the broader 'generative AI' technology space include 'text-to-image' tools like Dall-E or Midjourney. These again rely on training an algorithm on massive amounts of labelled image data to then enable it to create entirely novel images." While they are not as sophisticated as GAN, they can still do the job of tricking unsuspecting consumers into believing manipulated footage.

That all makes it seem as though we've opened Pandora's box. Yet the situation is not entirely hopeless. For people who want to spot and call out misinformation, there are ways to identify deepfakes.

"There are several things ordinary news consumers can do," said Shawn DuBravac, an expert on synthetic media, founder of Avrio Institute, and author of the New York Times-bestselling book "Digital Destiny: How the New Age of Data Will Transform the Way We Work, Live, and Communicate." Although he added a caveat — most people are not able to reliably detect any deepfake that is reasonably competent — DuBravac urged news consumers to nevertheless exercise basic discernment skills.

"Check the source," DuBravac told Salon. News consumers should try to verify videos — either by tracing them to their origin or by confirming their authenticity with multiple sources — instead of simply believing a compelling video because it fits with their pre-existing beliefs.

In addition, consumers might try to analyze a given video "for inconsistencies or irregularities in either the media format or the actual content. This might include examining the context of the information being conveyed." For example, the deepfake that targeted me had me refer to a Jewish place of worship as a "church," even though Jews worship in synagogues. The anonymous creator also left a clue to the video's falseness, which is not uncommon among people who make and spread deepfakes yet want to stay on the right side of the law: a faintly visible D-ID watermark tucked into the corner, "which identifies it [as] coming from an AI generated video creation platform."

"I imagine over time these platforms will require users to acknowledge that they have the lawful use of the underlying video they are manipulating," DuBravac speculated. Even without those kinds of requirements, though, deepfakes can still include visual evidence of their fabricated nature. For instance, DuBravac noted that often in a deepfake "the mouth movements are not perfectly aligned with the spoken words." Similarly, the technology will often struggle to distinguish between a target's head and body and the background, or in general not seem to properly blend with their surroundings. These things are difficult for untrained users to spot — research finds that it is notoriously difficult — but it is not entirely impossible.

"Some activities, such as realistic full-face deepfakes in complex scenes, are still hard to do well — and are unlikely to be used for deceptive videos in, for example, local politics."

"If you're thinking about AI-generated images, the current state of generative AI means that often there are problems with the hands (for example, distortion), waxy skin, unrealistic shadows, eye reflections that don't make sense in the real world, and objects that appear to blend into other objects outside the laws of physics," Gregory told Salon. "AI tools also do not do well with directly creating text within an image, and won't for example necessarily capture the correct logo or tag for a law enforcement officer." At the same time, Gregory warned that "we shouldn't rely on these trends; these systems improve fast, get better and better, and aim to achieve a more realistic look. We know from previous experience that these 'clues' go away quickly — for example, people used to think that deepfakes didn't blink, and now they do." Consequently journalists should stay up-to-date on the evolution of deepfake technology so that, when these flaws are fixed, they can be aware of other flaws that will alert themselves and their audiences to fraudulent content.

"For example, it's helpful to know what is increasingly easy to do right now — fake audio, or create realistic images quickly — while some activities, such as realistic full-face deepfakes in complex scenes are still hard to do well, and are unlikely to be used for deceptive videos in, for example, local politics," Gregory told Salon. "This will change over time but it is part of the role of the media to keep people informed."

Since online deepfake detection tools are notoriously unreliable, the burden falls on journalists to do the legwork.

"Most importantly, the burden and the blame shouldn't be on the viewers and individuals to detect increasingly sophisticated and indiscernible-to-the-eye fakes," Gregory said. "We can't just tell people to stare at the pixels and the details on every image and video they encounter online. We heard repeatedly in the global work and research we've done that we need to push the responsibility onto the companies developing tech, the platforms and distributors, who should watermark the content and develop provenance and detection technology that can help us see that images were synthesized, manipulated and shared and ensure those traces remain with images as they circulate."

All of these measures will take time, however, and in the interim deepfake technology will continue to evolve. This means that disturbing scenarios involving deepfakes will keep occurring, including some that seem for all the world like nightmares come true.

"One particularly disturbing example of malicious use of deepfakes I have experience with is revenge pornography," Lyu told Salon, referring to the practice of creating "non-consensual explicit content, often targeting ex-spouse or ex-partner, and the victims are usually women." In these images, the victim's face is swapped with the face of an actor in a pornographic video, creating realistic-looking videos or images that appear as if the person is engaged in explicit acts. "These deepfakes are often shared online without the victim's knowledge or consent, causing significant emotional distress, reputational damage, and even legal consequences," Lyu added.
