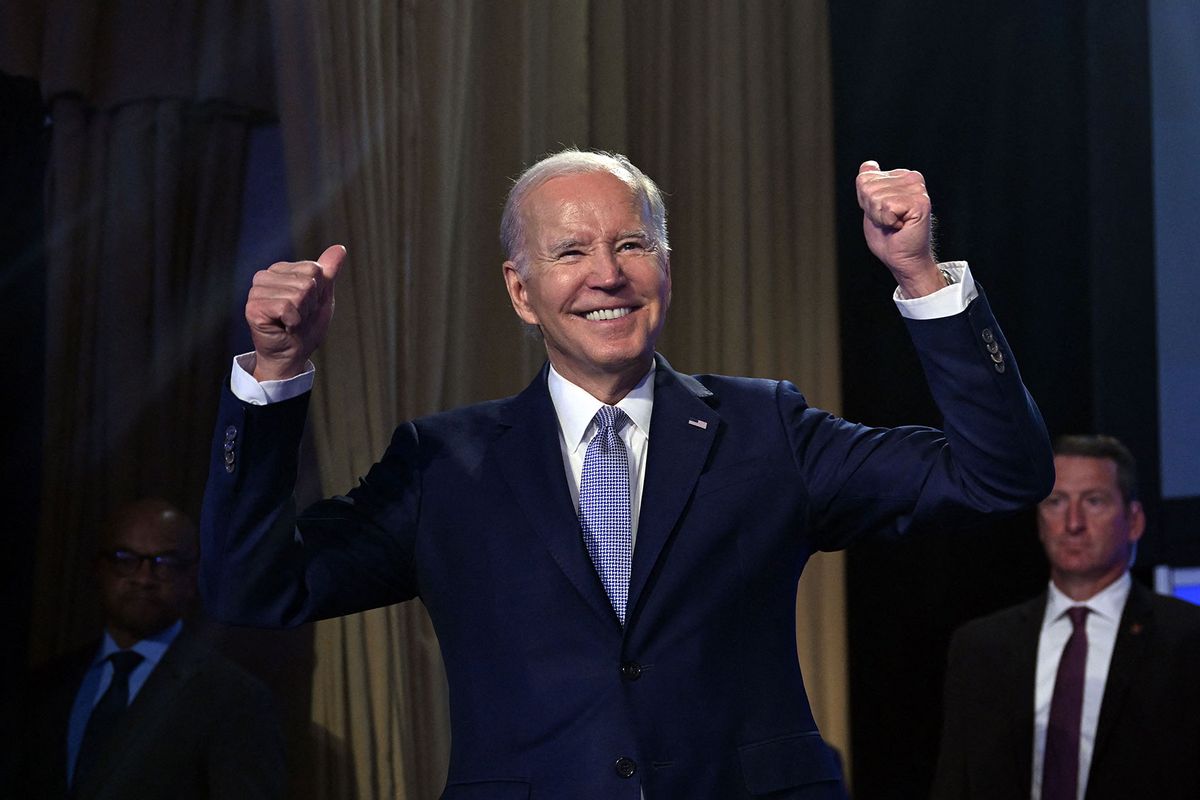

Several weeks ago, President Biden finally announced that he is seeking a second term in office. In a very well-produced video, President Biden made his case for a second term by emphasizing how Donald Trump and the Republican fascists and the MAGA movement are continuing their assaults on American democracy, personal freedom, civil rights, and a good society and that he is the leader best positioned to stop them. In all, the struggle to defend and protect American democracy is far from over and President Biden is ready "to finish the job".

How did Donald Trump and the Republican Party respond to Biden's announcement?

The Republican National Committee fired back with an artificial intelligence (AI)-manipulated fake video that showed a hypothetical scenario where Biden and Harris won the 2024 election. In the video, China, sensing Biden's "weakness" and "incompetence", attacked Taiwan and then proceeded to defeat the United States military. The video also shows scenes of financial collapse, an "invasion" by hordes of brown people across the U.S.-Mexico border, and domestic unrest with soldiers being deployed to enforce martial law in San Francisco (and presumably other "blue" cities as well).

The obviously faked video of President Biden is part of a much larger operation by the Republican Party and larger neofascist movement to use AI and other disruptive technologies to undermine the very idea of objective reality and empirical truth as part of their broader war on democracy and civil society.

To wit, after his triumphant CNN fake town hall last Wednesday, Donald Trump shared a digitally altered fake video of Anderson Cooper saying, "That was President Donald Trump ripping us a new asshole here at CNN's live presidential town hall."

For decades, the Republican Party's voters and other members of the right wing have been trained and conditioned by a vast and highly sophisticated propaganda machine (anchored by Fox "News") into believing in conspiracy theories, lies, and other distortions of facts and reality in service to the "conservative" movement's destructive revolutionary agenda.

The result is that Republicans, "conservatives" and other members of the right wing (including so-called "independent" voters) are predisposed and primed to accept fake and other AI-generated content as being real, however ridiculous and absurd such media and other information may be, if it conforms to their already distorted views of political and social reality (see: "fake news" and "alternative facts" and other Republican-fascist Orwellian Newspeak).

Kayla Gogarty, who is deputy research director at Media Matters, provided this context in an email to Salon:

Deep fakes, AI, and other forms of manipulated media are a predictable escalation of disinformation, especially since there are instances even prior to 2020 in which platforms allowed manipulated videos of Democrats, including then-House Speaker Nancy Pelosi, to spread across social media. Right-wing media and politicians have a vast ecosystem that can amplify misinformation and misleading talking points, and manipulated media is just the latest form for it.

Gogarty continues, "Social media platforms largely have policies against deep fakes and other forms of manipulated media that mislead the public, but these policies are vague and inconsistently enforced. Given the fact that platforms continue to struggle with other forms of misinformation, they are clearly unprepared for this moment in which the GOP seemingly plans to use AI and other forms of manipulated media, and the platforms will likely also struggle to prevent it from spreading on their platforms."

The Republican Party and larger neofascist movement's use of AI and other new digital technologies is part of a much larger global project by anti-democracy and other malign actors to undermine reality and the truth as a way of creating chaos and confusion among the mass public, an outcome which will, in turn, serve to delegitimize democracy as an effective form of governance.

Mollie Saltskog, who is a Senior Intelligence Analyst at The Soufan Group, explained to Salon what we know about these threats:

At the beginning of 2023, The Soufan Center published an IntelBrief looking at 2022 disinformation trends with a view to better understand how the threat posed by disinformation to democracies may evolve in 2023. We noted that in 2022 threat actors that utilized disinformation campaigns for their own political goals continued to innovate through relatively low-tech capabilities. For example, following the early 2022 ban in the European Union of Russian state-backed media outlets known to spread disinformation, like RT, Kremlin-backed/aligned disinformation actors innovated to get around the ban. They did so by impersonating European news outlets on Facebook to spread pro-Kremlin narratives about the war in Ukraine.

When looking at 2023, however, our analysis noted that we should expect innovation in the areas of emerging technology to aid not only more rapid, but also more sophisticated disinformation campaigns. We specifically highlighted the areas of AI-powered language models that can be used to generate fake information sources at great speed in different languages, with lower probability of errors than if humans were drafting the text, posts, comments, articles etc. Deepfakes are, of course, also highly concerning. Especially given that people tend to trust and believe more in what they can see rather than just read or hear about—this is what makes audiovisual manipulation so concerning.

Saltskog emphasizes how the Republican Party's anti-Biden fake video is the next escalation in what will likely be a much wider use of AI and related technologies by America's enemies:

I think this most recent example of the RNC video utilizing AI-generated imagery illustrates that leading up to the 2024 general election, we should expect and prepare for that not only our foreign adversaries—like Russia, China, and Iran—but also domestic actors will seek to deploy AI and ML-powered audiovisual content that has been manipulated. What becomes highly problematic when domestic political actors seek to utilize disinformation, including potentially using Deepfakes, is that they are playing into the hands of our foreign adversaries, like Russia and China.

Our adversaries want nothing more than to showcase to the world that democracy is flawed.

While technological innovation continues to lower the bar for state actors, proxies, and non-state actors to generate deepfakes (which is an issue that we certainly need to focus on in and of itself), so-called "cheap fakes" can also be highly disruptive to our society and democracy. Cheap fakes are audiovisual materials, like a video, that have been tampered with by a human using accessible and cheaper technology, like video-editing software. Contrast this to, for example, a Deepfake video that is created by machine learning, which is something that requires more technical resources. We've already seen cheap fakes be deployed in political contexts.

What do the American people think about AI technology?

As shown by a recent poll from Change Research, a majority of Americans are curious about AI technology and its implications for their lives (both positively and negatively) but would like the government to do more in terms of monitoring and regulating it.

As part of their poll, Change Research also conducted an experiment that confirmed how underprepared and vulnerable the average American is to being manipulated by AI technology:

42% of voters were confident they could tell the difference between AI-generated content and human-generated, but in our tests, they did no better than a coin flip.

When we asked voters to differentiate between statements promoting AI that were written by us and by AI, most respondents threw up their hands and were unable to tell the difference.

88% express concern with the ability for people to use artificial intelligence to make it seem like elected officials, government officials, and others are saying things they are not saying.

People have equal levels of concern about being misled by AI and being misled by politicians. About as many people are worried about people using artificial intelligence to create fake videos of candidates and elected officials (43%) as are worried about candidates and elected officials saying things that are not true and not being able to tell what is true and what is not (44%).

We asked people to watch a short video that people created and put on YouTube that had President Biden saying ridiculous things during his most recent state of the union (https://youtu.be/8QcbRM0Zq_c). More people found this video more concerning than amusing (45%) than the other way around (28%).

These findings reinforce Saltskog's deep concerns about the potentially dire impact of political disinformation and other sophisticated propaganda campaigns on America's politics and larger society:

In the short term, looking at 2024, there is, sadly, only so much we can do and I'm not optimistic that we are prepared, as a society, to really combat any type of disinformation campaign effectively, whether it is technically sophisticated or not. The immediate order of business would actually be for all of society (government, political parties, tech platforms, private sector, NGOs, academia) to come together and realize that it hurts everyone: our societal fabric, democracy, the bottom-line, political missions and goals, freedom of speech and academic freedoms. Actually, the only ones who benefit from disinformation campaigns targeting the American people are our adversaries. Right now, the issue of disinformation and misinformation is so highly politicized that it complicates the efforts by the U.S. government to effectively address this on its own. And I don't think we should or can exclusively rely on the government to address this national security challenge. We need everyone to pitch in.

In this sense we should also work together on how emerging technology can be part of the solution. See, technology in and of itself is not inherently good or bad. It is used for good or bad. I know, for example, of some truly fantastic technological innovations that help fact check, monitor, and ultimately combat the spread of fake information. Take TruePic, for example, which utilizes technology to enhance transparency of content, like authenticating an image or video that was captured by detailing if or how it has been edited, manipulated, or otherwise. AI and machine learning can also be used to monitor and analyze disinformation campaigns from foreign adversaries at scale—something that we deploy in our research to understand tactics, strategies, actors, capabilities and other key indicators needed to map the threat and trends, as well as inform policy recommendations. So there are also examples of when emerging technology can be very helpful, which gives me hope.

Even after eight years of experience with the Age of Trump and ascendant fascism, the American news media, the pundits and commentariat, and the responsible political class as a whole are largely continuing to operate under the rules and expectations of normal politics. In this framework, the Democrats and Republicans, "conservatives" and liberals, are presented as being reasonable alternatives to one another and co-partners in responsible governance who ultimately respect the country's political institutions and norms, even if the two parties' leaders and their voters happen to be highly "polarized" and engaged in a "culture war".

Thus, the mainstream news media persists in its slavish commitment to (and outright laziness in relying on) "bothsideism", "balance", "fairness", "objectivity" and other frameworks and approaches, such as "access journalism" and horse race coverage, that normalize the Republican fascists (be it Donald Trump or Ron DeSantis) and their ongoing attempts to end the country's multiracial pluralistic democracy.

With the rise of AI and other sophisticated and emerging technologies, the mainstream news media and political class must confront the challenge of how reality itself, beyond Trump's and the larger white right's embrace of the Big Lie and the right-wing echo chamber and its many layers of disinformation and misinformation, is being manipulated and distorted in ways that resemble a dystopic science fiction movie.

In such a political and social environment simply "reporting the facts" and "the news" and "letting the public decide" are insufficient. Moreover, such decisions are de facto acts of surrender to the Republican fascists and larger global right-wing and other malign actors who only care about power and possess utter contempt for the truth and the facts.

In an attempt to better orient myself — and perhaps steal some hope — I asked Darrell West, who is a Senior Fellow in the Center for Technology Innovation of the Governance Studies program at the Brookings Institution, to share his thoughts about AI technology and what comes next for American politics in this moment of democracy crisis.

We are at a dangerous point in American democracy with extremism rising and disinformation spreading false narratives that enrage people and make it difficult to address important problems. Technology is a major part of our current dysfunction because new tools such as deep fakes and generative AI democratize disinformation and give everyone the capabilities of troll farms. Nearly anyone can spread false information to large numbers of people and the tools have become so sophisticated it is difficult even for experts to distinguish the fake from the real. When people don't know what to believe, it becomes a breeding ground for public mistrust and mass manipulation.

The GOP's recent ad is the leading edge of what is likely to become a tsunami of fake videos and scary images in the upcoming election. The airwaves and digital platforms will be flooded with videos, audiotapes, and pictures alleging candidates are doing or saying bad things and it will be difficult to correct the record. These materials will be spread rapidly to millions of people while no one pays attention to the fact-checkers. The risk is fake materials will alter how people see the candidates and the campaign and they could affect who does well in 2024. It is likely to be a close race and it is impossible to know what information may nudge swing voters one way or another.

There are no guardrails in place to protect people from all the disinformation they will encounter. Candidates can say whatever they want, even if what they say is false, without any limitations. Courts give candidates broad leeway on speech and there are almost no limitations on the kinds of materials candidates or their supporters can disseminate. It will be a challenge over the next year and a half as we grapple with all the competing claims in what is one of the most high-stakes elections in recent times. American democracy is on the line and it may be decided by false claims and inaccurate beliefs.

If you are not already afraid, you should be.

By all indications, the 2024 presidential election will be very close and President Biden's victory over Donald Trump or some other Republican candidate is far from guaranteed. The use of AI and other new digital technologies will be a central feature of this struggle for the country's democracy and its future. Unfortunately, the Republican fascists and other malign actors are far ahead in that arms race and the Democrats are standing in place.

The Republican fascists and their forces are waging a war for the future across all areas of American life and society while the Democrats and other pro-democracy forces are largely still lost and confused in the Trumpocene, that malignant reality and fascist fever dream, and holding on to hopes of a return to "normal" that will not be coming back.